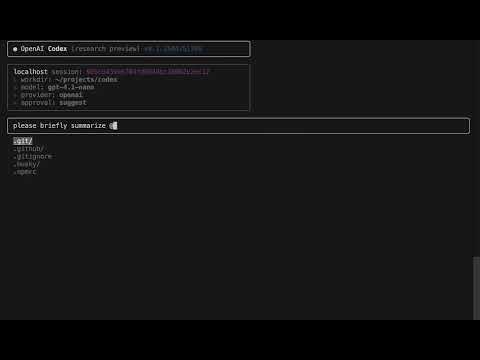

Agent wouldn't "see" attached images and would instead try to use the `view_file` tool:

<img width="1516" height="504" alt="image" src="https://github.com/user-attachments/assets/68a705bb-f962-4fc1-9087-e932a6859b12" />

In this PR, we wrap image content items in XML tags with the name of each image (now just a numbered name like `[Image #1]`), so that the model can understand inline image references by name. We also put the image content items above the user message, which the model seems to prefer (perhaps it is more used to definitions appearing before references).
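A minimal sketch of the tagging and ordering described above. The `ContentItem` enum and the exact tag text (`<image name="[Image #1]">` ... `</image>`) are assumptions for illustration, not the real codex-rs types or format:

```rust
/// Hypothetical content item type; the real codex-rs types differ.
#[derive(Debug, Clone, PartialEq)]
pub enum ContentItem {
    InputText(String),
    InputImage { image_url: String },
}

/// Builds message content in which every image is wrapped in open/close text
/// tags carrying its numbered name, and all images precede the user's text.
pub fn build_user_content(image_urls: &[String], user_text: &str) -> Vec<ContentItem> {
    let mut items = Vec::new();
    for (index, url) in image_urls.iter().enumerate() {
        // Number from 1 so the name matches references like "[Image #1]".
        let name = format!("[Image #{}]", index + 1);
        items.push(ContentItem::InputText(format!("<image name=\"{name}\">")));
        items.push(ContentItem::InputImage { image_url: url.clone() });
        items.push(ContentItem::InputText("</image>".to_string()));
    }
    // The user message, which may reference the images by name, comes last.
    items.push(ContentItem::InputText(user_text.to_string()));
    items
}
```

Putting the definitions (tagged images) before the references (the prompt text) mirrors the ordering the PR reports the model prefers.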

We also tweak the `view_file` tool description, which seemed to help a bit.

Results on a simple eval set of images:

Before

<img width="980" height="310" alt="image" src="https://github.com/user-attachments/assets/ba838651-2565-4684-a12e-81a36641bf86" />

After

<img width="918" height="322" alt="image" src="https://github.com/user-attachments/assets/10a81951-7ee6-415e-a27e-e7a3fd0aee6f" />

```json
[
  {
    "id": "single_describe",
    "prompt": "Describe the attached image in one sentence.",
    "images": ["image_a.png"]
  },
  {
    "id": "single_color",
    "prompt": "What is the dominant color in the image? Answer with a single color word.",
    "images": ["image_b.png"]
  },
  {
    "id": "orientation_check",
    "prompt": "Is the image portrait or landscape? Answer in one sentence.",
    "images": ["image_c.png"]
  },
  {
    "id": "detail_request",
    "prompt": "Look closely at the image and call out any small details you notice.",
    "images": ["image_d.png"]
  },
  {
    "id": "two_images_compare",
    "prompt": "I attached two images. Are they the same or different? Briefly explain.",
    "images": ["image_a.png", "image_b.png"]
  },
  {
    "id": "two_images_captions",
    "prompt": "Provide a short caption for each image (Image 1, Image 2).",
    "images": ["image_c.png", "image_d.png"]
  },
  {
    "id": "multi_image_rank",
    "prompt": "Rank the attached images from most colorful to least colorful.",
    "images": ["image_a.png", "image_b.png", "image_c.png"]
  },
  {
    "id": "multi_image_choice",
    "prompt": "Which image looks more vibrant? Answer with 'Image 1' or 'Image 2'.",
    "images": ["image_b.png", "image_d.png"]
  }
]
```

As explained in `codex-rs/core/BUILD.bazel`, including the repo's own `AGENTS.md` is a hack to get some tests passing. We should fix this properly, but I wanted to put a stake in the ground ASAP to get `just bazel-remote-test` working and then add a job to `bazel.yml` to ensure it keeps working.

As noted in the comment, this was causing a problem for me locally because Sapling backed up some files named `BUILD.bazel` under `.git/sl`, so Bazel tried to parse them.

It's a bit surprising that Bazel does not ignore `.git` out of the box, such that you would have to opt in to considering it rather than opting out.

Add model provider info to `/status` if non-default

Enterprises are running Codex and migrating between proxied/API key auth and SIWC. If you accidentally run Codex with `OPENAI_BASE_URL=...`, which is surprisingly easy to do, we don't surface this anywhere, and it may lead to breakage. One suggestion was to include this information in `/status`:

<img width="477" height="157" alt="Screenshot 2026-01-09 at 15 45 34" src="https://github.com/user-attachments/assets/630ce68f-c856-4a2b-a004-7df2fbe5de93" />

### Motivation

- Avoid placing PATH entries under the system temp directory by creating the helper directory under `CODEX_HOME` instead of `std::env::temp_dir()`.
- Fail fast on unsafe configuration by rejecting `CODEX_HOME` values that live under the system temp root to prevent writable PATH entries.
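A minimal sketch of the fail-fast check above, assuming both paths have already been canonicalized by the caller (for example, macOS `/tmp` resolves to `/private/tmp`). The `helper_dir` name and the `path-helpers` subdirectory are hypothetical, not the actual `codex-arg0` code:

```rust
use std::io;
use std::path::{Path, PathBuf};

/// Returns the directory for PATH helpers under CODEX_HOME, refusing
/// CODEX_HOME values that live under the system temp root.
pub fn helper_dir(codex_home: &Path, temp_root: &Path) -> io::Result<PathBuf> {
    // Path::starts_with compares whole components, so "/tmpfoo" is not
    // considered to be under "/tmp".
    if codex_home.starts_with(temp_root) {
        return Err(io::Error::new(
            io::ErrorKind::InvalidInput,
            format!(
                "CODEX_HOME {} must not be under the temp directory {}",
                codex_home.display(),
                temp_root.display()
            ),
        ));
    }
    // Hypothetical subdirectory name for the helper binaries.
    Ok(codex_home.join("path-helpers"))
}
```

In real use, `temp_root` would come from `std::env::temp_dir()`.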

### Testing

- Ran `just fmt`, which completed with a non-blocking `imports_granularity` warning.
- Ran `just fix -p codex-arg0` (Clippy fixes), which completed successfully.
- Ran `cargo test -p codex-arg0`, and the test run completed successfully.

This PR configures Codex CLI so it can be built with [Bazel](https://bazel.build) in addition to Cargo. The `.bazelrc` includes configuration so that remote builds can be done using [BuildBuddy](https://www.buildbuddy.io).

If you are familiar with Bazel, things should work as you expect, e.g., run `bazel test //... --keep-going` to run all the tests in the repo, but we have also added some new aliases in the `justfile` for convenience:

- `just bazel-test` to run tests locally
- `just bazel-remote-test` to run tests remotely (currently, the remote build is for x86_64 Linux regardless of your host platform). Note we are currently seeing the following test failures in the remote build, so we still need to figure out what is happening here:

  ```
  failures:
      suite::compact::manual_compact_twice_preserves_latest_user_messages
      suite::compact_resume_fork::compact_resume_after_second_compaction_preserves_history
      suite::compact_resume_fork::compact_resume_and_fork_preserve_model_history_view
  ```

- `just build-for-release` to build release binaries for all platforms/architectures remotely

To set up remote execution:

- [Create a BuildBuddy account](https://app.buildbuddy.io/) (OpenAI employees should also request org access at https://openai.buildbuddy.io/join/ with their `@openai.com` email address.)
- [Copy your API key](https://app.buildbuddy.io/docs/setup/) to `~/.bazelrc` (add the line `build --remote_header=x-buildbuddy-api-key=YOUR_KEY`)
- Use `--config=remote` in your `bazel` invocations (or add `common --config=remote` to your `~/.bazelrc`, or use the `just` commands)

## CI

In terms of CI, this PR introduces `.github/workflows/bazel.yml`, which uses Bazel to run the tests _locally_ on Mac and Linux GitHub runners (we are working on supporting Windows, but that is not ready yet). Note that the failures we are seeing in `just bazel-remote-test` do not occur in these GitHub CI jobs, so everything in `.github/workflows/bazel.yml` is green right now.

The `bazel.yml` workflow uses extra config in `.github/workflows/ci.bazelrc` so that macOS CI jobs build _remotely_ on Linux hosts (using the `docker://docker.io/mbolin491/codex-bazel` Docker image declared in the root `BUILD.bazel`), using cross-compilation to build the macOS artifacts. These artifacts are then downloaded to GitHub's macOS runner so the tests can be executed natively. This is the relevant config that enables this:

```
common:macos --config=remote
common:macos --strategy=remote
common:macos --strategy=TestRunner=darwin-sandbox,local
```

Because of the remote caching benefits we get from BuildBuddy, these new CI jobs can be extremely fast! For example, consider these two jobs that ran all the tests on Linux x86_64:

- Bazel 1m37s: https://github.com/openai/codex/actions/runs/20861063212/job/59940545209?pr=8875
- Cargo 9m20s: https://github.com/openai/codex/actions/runs/20861063192/job/59940559592?pr=8875

For now, we will continue to run both the Bazel and Cargo jobs for PRs, but once we add support for Windows and running Clippy, we should be able to cut over to using Bazel exclusively for PRs, which should still speed things up considerably. We will probably continue to run the Cargo jobs post-merge for commits that land on `main` as a sanity check.

Release builds will also continue to be done by Cargo for now.

Earlier attempt at this PR: https://github.com/openai/codex/pull/8832

Earlier attempt to add support for Buck2, now abandoned: https://github.com/openai/codex/pull/8504

---------

Co-authored-by: David Zbarsky <dzbarsky@gmail.com>

Co-authored-by: Michael Bolin <mbolin@openai.com>

Fixes #2558

Codex uses alternate screen mode (CSI 1049), which, per the xterm spec, doesn't support scrollback. Zellij follows this strictly, so users can't scroll back through output.

**Changes:**

- Add `tui.alternate_screen` config: `auto` (default), `always`, `never`
- Add `--no-alt-screen` CLI flag
- Auto-detect Zellij and skip alt screen (uses existing `ZELLIJ` env var detection)

**Usage:**

```bash
# CLI flag
codex --no-alt-screen

# Or in config.toml
[tui]
alternate_screen = "never"
```

With the default `auto` mode, Zellij users get working scrollback without any config changes.
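A minimal sketch of how the three modes, the CLI flag, and Zellij detection could combine. The enum and function names are hypothetical, and letting `--no-alt-screen` win over `always` is an assumption:

```rust
/// Hypothetical mirror of the `tui.alternate_screen` setting.
#[derive(Debug, Clone, Copy, PartialEq, Eq, Default)]
pub enum AltScreenMode {
    #[default]
    Auto,
    Always,
    Never,
}

/// Decides whether to enter the alternate screen (CSI 1049).
pub fn use_alternate_screen(mode: AltScreenMode, no_alt_screen_flag: bool, zellij_detected: bool) -> bool {
    // Assumption: the CLI flag overrides whatever the config says.
    if no_alt_screen_flag {
        return false;
    }
    match mode {
        AltScreenMode::Always => true,
        AltScreenMode::Never => false,
        // In auto mode, skip the alt screen under Zellij so scrollback works.
        AltScreenMode::Auto => !zellij_detected,
    }
}

/// Zellij sets the `ZELLIJ` environment variable inside its panes.
pub fn running_in_zellij() -> bool {
    std::env::var_os("ZELLIJ").is_some()
}
```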

---------

Co-authored-by: Josh McKinney <joshka@openai.com>

Some enterprises do not want their users to be able to `/feedback`.

<img width="395" height="325" alt="image" src="https://github.com/user-attachments/assets/2dae9c0b-20c3-4a15-bcd3-0187857ebbd8" />

Adds to `config.toml`:

```toml
[feedback]
enabled = false
```

I've deliberately decided to:

1. leave other references to `/feedback` (e.g. in the interrupt message and tips of the day) unchanged. I think we should continue to promote the feature even if it is not currently usable.
2. leave the `/feedback` menu item selectable and display an error saying it's disabled, rather than remove the menu item (which I believe would raise more questions).

but I'm happy to discuss these.

This will be followed by a change to `requirements.toml` that admins can use to force the value of `feedback.enabled`.

**Motivation**

The `originator` header is important for codex-backend’s Responses API proxy because it identifies the real end client (codex cli, codex vscode extension, codex exec, future IDEs) and is used to categorize requests by client for our enterprise compliance API.

Today the `originator` header is set by either:

- the `CODEX_INTERNAL_ORIGINATOR_OVERRIDE` env var (our VSCode extension does this)
- calling `set_default_originator()`, which sets a global immutable singleton (`codex exec` does this)

For `codex app-server`, we want the `initialize` JSON-RPC request to set that header because it is a natural place to do so. Example:

```json
{
  "method": "initialize",
  "id": 0,
  "params": {
    "clientInfo": {
      "name": "codex_vscode",
      "title": "Codex VS Code Extension",
      "version": "0.1.0"
    }
  }
}
```

and when app-server receives that request, it can call `set_default_originator()`. This is a much more natural interface than asking third-party developers to set an env var.

One hiccup is that `originator()` reads the global singleton and locks in the value, preventing a later `set_default_originator()` call from setting it. This works but is brittle, since any codepath that calls `originator()` before app-server can process an `initialize` JSON-RPC call would prevent app-server from setting it. This was actually the case with OTEL initialization, which runs on boot, and I also saw this behavior in certain tests.

Instead, what we now do is:

- [unchanged] If the `CODEX_INTERNAL_ORIGINATOR_OVERRIDE` env var is set, `originator()` returns that value, and calling `set_default_originator()` with some other value does NOT override it.
- [new] If no env var is set, `originator()` returns the default value, `codex_cli_rs`, UNTIL `set_default_originator()` is called once, at which point it is set to the new value and becomes immutable. Later calls to `set_default_originator()` return `SetOriginatorError::AlreadyInitialized`.
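A minimal sketch of these rules using `std::sync::OnceLock`. The real code keeps this state in a process-wide global; this sketch puts it in a hypothetical `Originator` struct so the rules can be exercised in isolation. Only `originator()`, `set_default_originator()`, `SetOriginatorError::AlreadyInitialized`, the env var name, and the `codex_cli_rs` default come from the description above:

```rust
use std::sync::OnceLock;

const DEFAULT_ORIGINATOR: &str = "codex_cli_rs";

#[derive(Debug, PartialEq, Eq)]
pub enum SetOriginatorError {
    AlreadyInitialized,
}

/// Hypothetical holder for the originator state.
pub struct Originator {
    env_override: Option<String>,
    explicit: OnceLock<String>,
}

impl Originator {
    pub fn new(env_override: Option<String>) -> Self {
        Self { env_override, explicit: OnceLock::new() }
    }

    pub fn from_env() -> Self {
        Self::new(std::env::var("CODEX_INTERNAL_ORIGINATOR_OVERRIDE").ok())
    }

    /// Reading never locks in a value: until someone calls
    /// `set_default_originator`, this falls back to the default.
    pub fn originator(&self) -> &str {
        if let Some(value) = &self.env_override {
            return value.as_str();
        }
        self.explicit.get().map(String::as_str).unwrap_or(DEFAULT_ORIGINATOR)
    }

    /// Succeeds only on the first call. When the env var is set, the stored
    /// value is simply never read, so the env var keeps winning.
    pub fn set_default_originator(&self, value: &str) -> Result<(), SetOriginatorError> {
        self.explicit
            .set(value.to_string())
            .map_err(|_| SetOriginatorError::AlreadyInitialized)
    }
}
```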

**Other notes**

- I updated `codex_core::otel_init::build_provider` to accept a service name override, and app-server sends a hardcoded `codex_app_server` service name to distinguish it from the `codex_cli_rs` name used by default (e.g. by the TUI).

**Next steps**

- Update VSCE to set the proper value for `clientInfo.name` on `initialize` and drop the `CODEX_INTERNAL_ORIGINATOR_OVERRIDE` env var.
- Delete support for `CODEX_INTERNAL_ORIGINATOR_OVERRIDE` in codex-rs.

This is a proposed fix for #8912

Information provided by Codex:

`no_proxy` means “don’t use any system proxy settings for this client,” even if macOS has proxies configured in System Settings or via the environment. On macOS, reqwest’s proxy discovery can call into the system-configuration framework; that’s the code path that was panicking with “Attempted to create a NULL object.” By forcing a direct connection for the OAuth discovery request, we avoid that proxy-resolution path entirely, so the system-configuration crate never gets invoked and the panic disappears.

Effectively:

- With proxies: reqwest asks the OS for proxy config → system-configuration gets touched → panic.
- With `no_proxy`: reqwest skips proxy lookup → no system-configuration call → no panic.

So the fix doesn’t change any MCP protocol behavior; it just prevents the OAuth discovery probe from touching the macOS proxy APIs that are crashing in the reported environment.

This fix does change behavior for the OAuth discovery probe used in `codex mcp list` / auth status detection. With `no_proxy`, that probe won’t use system or env proxy settings, so:

- If a server is only reachable via a proxy, the discovery call may fail and we’ll incorrectly show auth as Unsupported/NotLoggedIn.
- If the server is reachable directly (the common case), behavior is unchanged.

As an alternative, we could try to get a fix into the [system-configuration](https://github.com/mullvad/system-configuration-rs) library. It looks like this library is still under development but has a slow release pace.

As explained in https://github.com/openai/codex/issues/8945 and https://github.com/openai/codex/issues/8472, there are legitimate cases where users expect processes spawned by Codex to inherit environment variables such as `LD_LIBRARY_PATH` and `DYLD_LIBRARY_PATH`, where failing to do so can cause significant performance issues.

This PR removes the use of `codex_process_hardening::pre_main_hardening()` in Codex CLI (which was added not in response to a known security issue, but because it seemed like a prudent thing to do from a security perspective: https://github.com/openai/codex/pull/4521), but we will continue to use it in `codex-responses-api-proxy`. At some point, we probably want to introduce a slightly different version of `codex_process_hardening::pre_main_hardening()` in Codex CLI that excludes said environment variables from the Codex process itself, but continues to propagate them to subprocesses.

Handle null tool arguments in the MCP resource handler so optional resource tools accept null without failing, preserving normal JSON parsing for non-null payloads and improving robustness when models emit null; this avoids spurious argument parse errors for list/read MCP resource calls.

Elevated Sandbox NUX:

* prompt for elevated sandbox setup when agent mode is selected (via `/approvals` or at startup)
* prompt for degraded sandbox if elevated setup is declined or fails
* introduce `/elevate-sandbox` command to upgrade from the degraded experience.

This seems to be necessary to get the Bazel builds on ARM Linux to go green on https://github.com/openai/codex/pull/8875.

I don't feel great about timeout-whack-a-mole, but we're still learning here...

Fix flakiness of CI test: https://github.com/openai/codex/actions/runs/20350530276/job/58473691434?pr=8282

This PR does two things:

1. move the flaky test to use the Responses API instead of the Chat Completions API
2. make `mcp_process` agnostic to the order in which responses/notifications/requests come in, by buffering messages that have not been read yet
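A minimal sketch of the order-agnostic buffering idea. Messages are plain strings here and the predicate stands in for matching on a JSON-RPC id or method; `BufferedReader` and `read_matching` are hypothetical names, not `mcp_process`'s actual API:

```rust
use std::collections::VecDeque;

/// Reads messages until one matches, stashing non-matching messages so a
/// later read (looking for something else) can still find them.
pub struct BufferedReader<I: Iterator<Item = String>> {
    source: I,
    pending: VecDeque<String>,
}

impl<I: Iterator<Item = String>> BufferedReader<I> {
    pub fn new(source: I) -> Self {
        Self { source, pending: VecDeque::new() }
    }

    pub fn read_matching(&mut self, pred: impl Fn(&str) -> bool) -> Option<String> {
        // First check messages buffered by earlier calls.
        if let Some(pos) = self.pending.iter().position(|m| pred(m.as_str())) {
            return self.pending.remove(pos);
        }
        // Then read new messages, buffering the ones we are not waiting for.
        for message in self.source.by_ref() {
            if pred(message.as_str()) {
                return Some(message);
            }
            self.pending.push_back(message);
        }
        None
    }
}
```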

I have seen this test flake out sometimes when running the macOS build using Bazel in CI: https://github.com/openai/codex/pull/8875. Perhaps Bazel runs with greater parallelism, inducing a heavier load, causing an issue?

Historically, we started with a `CodexAuth` that knew how to refresh its own tokens and then added `AuthManager`, which did a different kind of refresh (re-reading from disk).

I don't think it makes sense for both `CodexAuth` and `AuthManager` to be mutable and contain behaviors.

Move all refresh logic into `AuthManager` and keep `CodexAuth` as a data object.

This updates core shell environment policy handling to match Windows' case-insensitive variable names and adds a Windows-only regression test, so `Path`/`TEMP` are no longer dropped when `inherit=core`.
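A minimal sketch of case-insensitive name matching for the core variable filter. The `CORE_VARS` list is hypothetical and much shorter than whatever codex-core actually keeps; in real code the `case_insensitive` flag would come from `cfg!(windows)`:

```rust
/// Hypothetical subset of variable names kept when `inherit = core`.
const CORE_VARS: &[&str] = &["HOME", "PATH", "TEMP", "TMP", "USERNAME"];

fn names_match(a: &str, b: &str, case_insensitive: bool) -> bool {
    // Windows treats `Path` and `PATH` as the same variable.
    if case_insensitive {
        a.eq_ignore_ascii_case(b)
    } else {
        a == b
    }
}

/// Keeps only "core" variables, preserving the original name casing.
pub fn filter_core_env(vars: &[(String, String)], case_insensitive: bool) -> Vec<(String, String)> {
    vars.iter()
        .filter(|(name, _)| CORE_VARS.iter().any(|core| names_match(name, core, case_insensitive)))
        .cloned()
        .collect()
}
```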

Fix flakiness of CI tests: https://github.com/openai/codex/actions/runs/20350530276/job/58473691443?pr=8282

This PR does two things:

1. test with the Responses API instead of the Chat Completions API in `thread_resume` tests;
2. add a new Responses API fixture that mocks out an arbitrary number of Responses API calls (including no calls), each returning the same repeated response.

Tested by CI

**Before:**

```
Error loading configuration: value `Never` is not in the allowed set [OnRequest]
```

**After:**

```
Error loading configuration: invalid value for `approval_policy`: `Never` is not in the allowed set [OnRequest] (set by MDM com.openai.codex:requirements_toml_base64)
```

This is done by introducing a new struct, `ConfigRequirementsWithSources`, onto which we now `merge_unset_fields`. It also introduces a type that pairs a requirement value with its `RequirementSource` (inspired by `ConfigLayerSource`):

```rust
/// A requirement value paired with the source that set it.
pub struct Sourced<T> {
    pub value: T,
    pub source: RequirementSource,
}
```
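A minimal sketch of how a `Sourced` value could produce the "After" error message. The `RequirementSource` variants and the `allowed_set_error` helper are hypothetical; only `Sourced<T>`'s fields and the message shape come from the description above:

```rust
use std::fmt;

/// Hypothetical requirement sources; the real enum may differ.
#[derive(Debug, Clone, PartialEq)]
pub enum RequirementSource {
    Mdm { domain: String, key: String },
    File(String),
}

impl fmt::Display for RequirementSource {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            RequirementSource::Mdm { domain, key } => write!(f, "MDM {domain}:{key}"),
            RequirementSource::File(path) => write!(f, "{path}"),
        }
    }
}

pub struct Sourced<T> {
    pub value: T,
    pub source: RequirementSource,
}

/// Names the field and reports where the allowed set came from.
pub fn allowed_set_error<T: fmt::Debug>(field: &str, candidate: &T, allowed: &Sourced<Vec<T>>) -> String {
    format!(
        "invalid value for `{field}`: `{candidate:?}` is not in the allowed set {:?} (set by {})",
        allowed.value, allowed.source
    )
}
```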

## Summary

- avoid setting a new process group when stdio is inherited (keeps child in foreground PG)
- keep process-group isolation when stdio is redirected so killpg cleanup still works
- prevents macOS job-control SIGTTIN stops that look like hangs after output

## Testing

- `cargo build -p codex-cli`
- `GIT_CONFIG_GLOBAL=/dev/null GIT_CONFIG_NOSYSTEM=1 CARGO_BIN_EXE_codex=/Users/denis/Code/codex/codex-rs/target/debug/codex /opt/homebrew/bin/timeout 30m cargo test -p codex-core -p codex-exec`

## Context

This fixes macOS sandbox hangs for commands like `elixir -v` / `erl -noshell`, where the child was moved into a new process group while still attached to the controlling TTY. See issue #8690.

## Authorship & collaboration

- This change and analysis were authored by **Codex** (AI coding agent).
- Human collaborator: @seeekr provided repro environment, context, and review guidance.
- CLI used: `codex-cli 0.77.0`.
- Model: `gpt-5.2-codex (xhigh)`.

Co-authored-by: Eric Traut <etraut@openai.com>

Include project-level `AGENTS.md` and skills in `/review` sessions so the review sub-agent uses the same instruction pipeline as standard runs, keeping reviewer context aligned with normal sessions.

Sort `list_dir` entries before applying offset/limit so pagination matches the displayed order, update pagination/truncation expectations, and add coverage for sorted pagination. This ensures stable, predictable directory pages when `list_dir` is enabled.
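A minimal sketch of sort-then-paginate. `paginate_sorted` is a hypothetical name, and `offset` is treated as a zero-based index here (the real tool's offset convention may differ):

```rust
/// Sorts entries first and only then applies offset/limit, so consecutive
/// pages line up with the order the entries are displayed in.
pub fn paginate_sorted(mut entries: Vec<String>, offset: usize, limit: usize) -> Vec<String> {
    entries.sort();
    entries.into_iter().skip(offset).take(limit).collect()
}
```

Paginating before sorting would make page contents depend on the filesystem's (unspecified) `read_dir` order, which is exactly the instability the change removes.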

Add metrics capabilities to Codex. The `README.md` is up to date.

This will not be merged with the metrics before this PR of course: https://github.com/openai/codex/pull/8350

## Summary

This PR builds _heavily_ on the work from @occurrent in #8021. I've only added a small fix, added additional tests, and propagated the changes to tui2.

From the original PR:

> On Windows, Codex relies on PasteBurst for paste detection because bracketed paste is not reliably available via crossterm.
>
> When pasted content starts with non-ASCII characters, input is routed through handle_non_ascii_char, which bypasses the normal paste burst logic. This change extends the paste burst window for that path, which should ensure that Enter is correctly grouped as part of the paste.

## Testing

- [x] tested locally cross-platform
- [x] added regression tests

---------

Co-authored-by: occur <occurring@outlook.com>

https://github.com/openai/codex/pull/8879 introduced the `find_resource!` macro, but now that I am about to use it in more places, I realize that it should take care of this normalization case for callers.

Note the `use $crate::path_absolutize::Absolutize;` line is there so that users of `find_resource!` do not have to explicitly add `path-absolutize` to their own `Cargo.toml`.

To support Bazelification in https://github.com/openai/codex/pull/8875, this PR introduces a new `find_resource!` macro that we use in place of our existing logic in tests that looks for resources relative to the compile-time `CARGO_MANIFEST_DIR` env var.

To make this work, we plan to add the following to all `rust_library()` and `rust_test()` Bazel rules in the project:

```
rustc_env = {
    "BAZEL_PACKAGE": native.package_name(),
},
```

Our new `find_resource!` macro reads this value via `option_env!("BAZEL_PACKAGE")` so that the Bazel package _of the code using `find_resource!`_ is injected into the code expanded from the macro. (If `find_resource()` were a function, then `option_env!("BAZEL_PACKAGE")` would always be `codex-rs/utils/cargo-bin`, which is not what we want.)

Note we only consider the `BAZEL_PACKAGE` value when the `RUNFILES_DIR` environment variable is set at runtime, indicating that the test is being run by Bazel. In this case, we have to concatenate the runtime `RUNFILES_DIR` with the compile-time `BAZEL_PACKAGE` value to build the path to the resource.
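A minimal sketch of the macro-plus-helper split described above. It follows the description literally (`RUNFILES_DIR` joined with `BAZEL_PACKAGE`, ignoring any workspace-name directory that real runfiles trees may contain); `resolve_resource` is a hypothetical helper, and the `"."` fallback for a missing `CARGO_MANIFEST_DIR` exists only so the sketch compiles outside Cargo:

```rust
use std::ffi::OsString;
use std::path::{Path, PathBuf};

/// Resolves `relative` under RUNFILES_DIR/BAZEL_PACKAGE when running under
/// Bazel, otherwise relative to the crate's manifest directory.
pub fn resolve_resource(
    bazel_package: Option<&str>,
    manifest_dir: &Path,
    runfiles_dir: Option<OsString>,
    relative: &str,
) -> PathBuf {
    match (runfiles_dir, bazel_package) {
        (Some(runfiles), Some(package)) => PathBuf::from(runfiles).join(package).join(relative),
        _ => manifest_dir.join(relative),
    }
}

/// Must be a macro: `option_env!` expands in the *calling* crate, so each
/// caller sees its own BAZEL_PACKAGE and CARGO_MANIFEST_DIR.
#[macro_export]
macro_rules! find_resource {
    ($relative:expr) => {
        $crate::resolve_resource(
            option_env!("BAZEL_PACKAGE"),
            std::path::Path::new(option_env!("CARGO_MANIFEST_DIR").unwrap_or(".")),
            // Read at runtime: only set when Bazel runs the test.
            std::env::var_os("RUNFILES_DIR"),
            $relative,
        )
    };
}
```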

In testing this change, I discovered one funky edge case in `codex-rs/exec-server/tests/common/lib.rs` where we have to _normalize_ (but not canonicalize!) the result from `find_resource!` because the path contains a `common/..` component that does not exist on disk when the test is run under Bazel, so it must be semantically normalized using the [`path-absolutize`](https://crates.io/crates/path-absolutize) crate before it is passed to `dotslash fetch`.
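To illustrate why lexical normalization works where `std::fs::canonicalize` fails, here is a std-only stand-in for the same idea. It is not the `path-absolutize` API: it resolves `.` and `..` purely on path components and never touches the filesystem, so a missing `common/` directory does not matter:

```rust
use std::path::{Component, Path, PathBuf};

/// Lexically removes `.` and `..` components without filesystem access.
pub fn normalize_lexically(path: &Path) -> PathBuf {
    let mut out = PathBuf::new();
    for component in path.components() {
        match component {
            Component::CurDir => {}
            Component::ParentDir => {
                if matches!(out.components().next_back(), Some(Component::Normal(_))) {
                    // "a/b/.." becomes "a/".
                    out.pop();
                } else if !out.has_root() {
                    // Leading ".." in a relative path must be kept.
                    out.push("..");
                }
                // At the root, ".." is dropped: "/.." is "/".
            }
            other => out.push(other.as_os_str()),
        }
    }
    out
}
```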

Because this new behavior may be non-obvious, this PR also updates `AGENTS.md` to make humans/Codex aware that this API is preferred.

The Bazelification work in-flight over at https://github.com/openai/codex/pull/8832 needs this fix so that Bazel can find the path to the DotSlash file for `bash`.

With this change, the following almost works:

```
bazel test --test_output=errors //codex-rs/exec-server:exec-server-all-test
```

That is, the `list_tools` test now passes, but `accept_elicitation_for_prompt_rule` still fails because it runs Seatbelt itself, so it needs to be run outside Bazel's local sandboxing.

Skills discovery now follows symlink entries for `SkillScope::User` (`$CODEX_HOME/skills`) and `SkillScope::Admin` (e.g. `/etc/codex/skills`).

Added cycle protection: directories are canonicalized and tracked in a visited set to prevent infinite traversal from circular links.

Added per-root traversal limits to avoid accidentally scanning huge trees:

- max depth: 6
- max directories: 2000 (logs a warning if truncated)

For now, symlink stat failures and traversal truncation are logged rather than surfaced as UI “invalid SKILL.md” warnings.
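A minimal sketch of a symlink-following walk with a canonicalized visited set and the two limits above. `walk_skill_dirs` is hypothetical, it uses `eprintln!` where the real code would use its logger, and the exact depth/count semantics (root is depth 0; truncation triggers on the first new directory past the limit) are assumptions:

```rust
use std::collections::HashSet;
use std::fs;
use std::path::{Path, PathBuf};

pub const MAX_DEPTH: usize = 6;
pub const MAX_DIRS: usize = 2000;

/// Returns the canonical directories visited and whether the walk was truncated.
pub fn walk_skill_dirs(root: &Path) -> (Vec<PathBuf>, bool) {
    let mut visited: HashSet<PathBuf> = HashSet::new();
    let mut dirs = Vec::new();
    let mut stack = vec![(root.to_path_buf(), 0usize)];
    while let Some((dir, depth)) = stack.pop() {
        // canonicalize resolves symlinks, so a circular link maps back to a
        // directory that is already in `visited` and gets skipped.
        let canonical = match fs::canonicalize(&dir) {
            Ok(path) => path,
            Err(err) => {
                eprintln!("warning: cannot resolve {}: {err}", dir.display());
                continue;
            }
        };
        if !visited.insert(canonical.clone()) {
            continue;
        }
        if dirs.len() >= MAX_DIRS {
            eprintln!("warning: stopped after {} directories under {}", MAX_DIRS, root.display());
            return (dirs, true);
        }
        dirs.push(canonical.clone());
        if depth >= MAX_DEPTH {
            continue;
        }
        let entries = match fs::read_dir(&canonical) {
            Ok(entries) => entries,
            Err(err) => {
                eprintln!("warning: cannot read {}: {err}", canonical.display());
                continue;
            }
        };
        for entry in entries.flatten() {
            let path = entry.path();
            // fs::metadata follows symlinks, so symlinked directories are walked too.
            if fs::metadata(&path).map(|m| m.is_dir()).unwrap_or(false) {
                stack.push((path, depth + 1));
            }
        }
    }
    (dirs, false)
}
```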

<img width="763" height="349" alt="Screenshot 2026-01-07 at 18 37 59" src="https://github.com/user-attachments/assets/569d01cb-ea91-4113-889b-ba74df24adaf" />

It may not make sense to use the `/model` menu with a custom `OPENAI_BASE_URL`, but some model proxies may support it, so we shouldn't disable it completely. A warning is a reasonable compromise.

Fixes #8609

# Summary

Emphasize single-line name/description values and quoting when values

could be interpreted as YAML syntax.

# Testing

Not run (skill-only change).

Adds a new feature, `enable_request_compression`, that compresses requests to the codex-backend using zstd. Compression currently only applies to the codex-backend, so even when the feature is enabled it only takes effect for OpenAI providers when using `chatgpt::auth`.

Also added a new info log line for evaluating the compression ratio and the overhead of compressing before sending the request. You can enable it with `RUST_LOG=$RUST_LOG,codex_client::transport=info`:

```
2026-01-06T00:09:48.272113Z INFO codex_client::transport: Compressed request body with zstd pre_compression_bytes=28914 post_compression_bytes=11485 compression_duration_ms=0
```

We used to override the truncation policy in the context manager by comparing the model info against the config value. A better way to do it is to construct the model info using the config value.

**Summary**

This PR makes “ApprovalDecision::AcceptForSession / don’t ask again this session” actually work for `apply_patch` approvals by caching approvals based on absolute file paths in codex-core, properly wiring it through app-server v2, and exposing the choice in both TUI and TUI2.

- This brings `apply_patch` calls to feature parity with general shell commands, which also have a "Yes, and don't ask again" option.
- This also fixes VSCE's "Allow this session" button so that it actually works.

While we're at it, we also split the app-server v2 protocol's `ApprovalDecision` enum so execpolicy amendments are only available for command execution approvals.

**Key changes**

- Core: per-session patch approval allowlist keyed by absolute file paths
  - Handles multi-file patches and renames/moves by recording both source and destination paths for `Update { move_path: Some(...) }`.
  - Extend the `Approvable` trait and `ApplyPatchRuntime` to work with multiple keys, because an `apply_patch` tool call can modify multiple files. For a request to be auto-approved, we need to check that all file paths have been approved previously.
- App-server v2: honor AcceptForSession for file changes
  - File-change approval responses now map AcceptForSession to ReviewDecision::ApprovedForSession (no longer downgraded to plain Approved).
  - Replace `ApprovalDecision` with two enums: `CommandExecutionApprovalDecision` and `FileChangeApprovalDecision`
- TUI / TUI2: expose “don’t ask again for these files this session”
  - Patch approval overlays now include a third option (“Yes, and don’t ask again for these files this session (s)”).
  - Snapshot updates for the approval modal.
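A minimal sketch of a per-session allowlist with the "every path must already be approved" rule. `SessionPatchApprovals` is hypothetical, not the actual `Approvable`/`ApplyPatchRuntime` code; treating a patch with no paths as not auto-approved is an assumption:

```rust
use std::collections::HashSet;
use std::path::PathBuf;

#[derive(Default)]
pub struct SessionPatchApprovals {
    approved: HashSet<PathBuf>,
}

impl SessionPatchApprovals {
    /// Records every absolute path touched by a patch approved "for session".
    /// For a move, callers pass both the source and the destination.
    pub fn approve_for_session(&mut self, paths: &[PathBuf]) {
        self.approved.extend(paths.iter().cloned());
    }

    /// A multi-file patch skips the prompt only if all of its paths were
    /// approved earlier in this session.
    pub fn is_auto_approved(&self, paths: &[PathBuf]) -> bool {
        !paths.is_empty() && paths.iter().all(|p| self.approved.contains(p))
    }
}
```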

**Tests added/updated**

- Core:
  - Integration test that proves ApprovedForSession on a patch skips the next patch prompt for the same file
- App-server:
  - v2 integration test verifying FileChangeApprovalDecision::AcceptForSession works properly

**User-visible behavior**

- When the user approves a patch “for session”, future patches touching only those previously approved file(s) will no longer prompt again during that session (both via app-server v2 and TUI/TUI2).

**Manual testing**

Tested both TUI and TUI2; see screenshots below.

TUI:

<img width="1082" height="355" alt="image" src="https://github.com/user-attachments/assets/adcf45ad-d428-498d-92fc-1a0a420878d9" />

TUI2:

<img width="1089" height="438" alt="image" src="https://github.com/user-attachments/assets/dd768b1a-2f5f-4bd6-98fd-e52c1d3abd9e" />

> // todo(aibrahim): why are we passing model here while it can change?

we update it on each turn with `.with_model`

> //TODO(aibrahim): run CI in release mode.

although it's good to have, release builds take double the time tests take.

> // todo(aibrahim): make this async function

we figured out another way of doing this sync

- Merge ModelFamily into ModelInfo

- Remove logic for adding instructions to apply patch

- Add compaction limit and visible context window to `ModelInfo`

See https://rustsec.org/advisories/RUSTSEC-2026-0002.

Our `ratatui` fork has a transitive dependency on an older version of the `lru` crate, though, so to get CI green ASAP, this PR also adds an exception to `deny.toml` for `RUSTSEC-2026-0002`, which will hopefully be short-lived.

Fixes `CodexExec` to avoid missing early process exits by registering the exit handler up front and deferring the error until after stdout is drained, and adds a regression test that simulates a fast-exit child while still producing output so hangs are caught.

Handle `/review <instructions>` in the TUI and TUI2 by routing it as a custom review command instead of plain text, wiring up command dispatch and adding composer coverage so that typing `/review text` starts a review directly rather than posting a message. User impact: `/review` with arguments now kicks off the review flow; previously it would just be forwarded as a plain command and would not actually start a review.

Fixes `apply.rs` path parsing so that:

- quoted diff headers are tokenized and extracted correctly,
- `/dev/null` headers are ignored before prefix stripping to avoid bogus `dev/null` paths, and
- `git apply` output paths are unescaped from C-style quoting.
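A minimal sketch of two of these fixes: C-style unquoting (git writes non-ASCII bytes as octal escapes, so bytes are collected and decoded as UTF-8 at the end) and checking `/dev/null` before stripping the leading `a/`/`b/` component. The function names are hypothetical, not `apply.rs`'s, and the sketch assumes the header operand has already been tokenized out of the `---`/`+++` line:

```rust
/// Decodes a C-style quoted path as printed by git, e.g. "a/caf\303\251".
pub fn unquote_c_style(token: &str) -> Option<String> {
    let inner = token.strip_prefix('"')?.strip_suffix('"')?;
    let mut out: Vec<u8> = Vec::new();
    let mut bytes = inner.bytes().peekable();
    while let Some(b) = bytes.next() {
        if b != b'\\' {
            out.push(b);
            continue;
        }
        match bytes.next()? {
            b'a' => out.push(0x07),
            b'b' => out.push(0x08),
            b'f' => out.push(0x0c),
            b'n' => out.push(b'\n'),
            b'r' => out.push(b'\r'),
            b't' => out.push(b'\t'),
            b'v' => out.push(0x0b),
            b'"' => out.push(b'"'),
            b'\\' => out.push(b'\\'),
            first @ b'0'..=b'7' => {
                // Up to three octal digits encode one raw byte.
                let mut value = u32::from(first - b'0');
                for _ in 0..2 {
                    let next = match bytes.peek() {
                        Some(&n) if (b'0'..=b'7').contains(&n) => n,
                        _ => break,
                    };
                    value = value * 8 + u32::from(next - b'0');
                    bytes.next();
                }
                out.push(u8::try_from(value).ok()?);
            }
            _ => return None,
        }
    }
    String::from_utf8(out).ok()
}

/// Returns the repo-relative path for one header operand, or None for /dev/null.
pub fn header_path(operand: &str) -> Option<String> {
    let raw = if operand.starts_with('"') {
        unquote_c_style(operand)?
    } else {
        operand.to_string()
    };
    // Must run before prefix stripping: stripping the first component of
    // "/dev/null" would produce the bogus path "dev/null".
    if raw == "/dev/null" {
        return None;
    }
    // Strip the leading "a/" or "b/" component, like `git apply -p1`.
    let stripped = raw.split_once('/').map_or(raw.as_str(), |(_, rest)| rest);
    Some(stripped.to_string())
}
```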

**Why**

This prevents potentially missed staging and misclassified paths when applying or reverting patches, which could lead to incorrect behavior for repos with spaces or escaped characters in filenames.

**Impact**

I checked, and this is only used in the cloud tasks support and the `codex apply <task_id>` flow.

Done to avoid spammy warnings ending up in the model context without having to switch to nightly:

```
Warning: can't set `imports_granularity = Item`, unstable features are only available in nightly channel.
```

Set `login=false` for the shell tool in the timing-based parallelism test so it does not depend on slow user login shells, making the test deterministic with no user-facing changes. This prevents occasional flakes when running locally.

With `config.toml`:

```
model = "gpt-5.1-codex"
```

(where `gpt-5.1-codex` has `show_in_picker: false` in [`model_presets.rs`](https://github.com/openai/codex/blob/main/codex-rs/core/src/models_manager/model_presets.rs); this happens if the user hasn't used Codex in a while, so they didn't see the popup before their model was changed to `show_in_picker: false`)

The upgrade popup previously did not show (because `gpt-5.1-codex` was filtered out of the model list in code). Now the filtering is done downstream in the TUI and app-server, so the model upgrade popup shows:

<img width="1503" height="227" alt="Screenshot 2026-01-06 at 5 04 37 PM" src="https://github.com/user-attachments/assets/26144cc2-0b3f-4674-ac17-e476781ec548" />

Use the contents of the commit message from the commit associated with the tag (that contains the version bump) as the release notes by writing them to a file and then specifying the file as the `body_path` of `softprops/action-gh-release@v2`.

Add `web_search_cached` feature to config. Enables the `web_search` tool with access only to cached/indexed results (see [docs](https://platform.openai.com/docs/guides/tools-web-search#live-internet-access)). This takes precedence over the existing `web_search_request`, which continues to enable `web_search` over live results as it did before.

`web_search_cached` is disabled for review mode, as `web_search_request` is.
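A minimal sketch of the precedence rules above. `WebSearchMode` and `web_search_mode` are hypothetical names; only the two feature names and the review-mode rule come from the description:

```rust
#[derive(Debug, PartialEq, Eq)]
pub enum WebSearchMode {
    Disabled,
    /// Cached/indexed results only.
    Cached,
    /// Live internet results.
    Live,
}

pub fn web_search_mode(web_search_cached: bool, web_search_request: bool, review_mode: bool) -> WebSearchMode {
    // Review mode disables both variants.
    if review_mode {
        return WebSearchMode::Disabled;
    }
    // `web_search_cached` takes precedence over `web_search_request`.
    if web_search_cached {
        WebSearchMode::Cached
    } else if web_search_request {
        WebSearchMode::Live
    } else {
        WebSearchMode::Disabled
    }
}
```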

Add `thread/rollback` to app-server to support IDEs undoing the last N turns of a thread.

For context, an IDE partner will be supporting an "undo" capability where the IDE (the app-server client) will be responsible for reverting the local changes made during the last turn. To support this well, we also need a way to drop the last turn (or more generally, the last N turns) from the agent's context. This is what `thread/rollback` does.

**Core idea**: A thread rollback is represented as a persisted event message (`EventMsg::ThreadRollback`) in the rollout JSONL file, not by rewriting history. On resume, both the model's context (core replay) and the UI turn list (app-server v2's thread history builder) apply these markers, so the pruned history is consistent across live conversations and `thread/resume`.

Implementation notes:

- Rollback only affects agent context and appends to the rollout file; clients are responsible for reverting files on disk.
- If a thread rollback is currently in progress, subsequent `thread/rollback` calls are rejected.
- Because we use `CodexConversation::submit` and codex core tracks active turns, an error on concurrent rollbacks is communicated via an `EventMsg::Error` with a new variant, `CodexErrorInfo::ThreadRollbackFailed`. app-server watches for that and sends the BAD_REQUEST RPC response.

Tests cover thread rollbacks in both core and app-server, including when `num_turns` > existing turns (which clears all turns).
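A minimal sketch of replaying a rollout that contains rollback markers. The `RolloutEvent` enum is a hypothetical simplification of the real `EventMsg` stream; it shows how a marker drops the last `num_turns` turns without rewriting the file, and how an oversized `num_turns` clears everything:

```rust
/// Hypothetical, simplified rollout events.
#[derive(Debug, Clone, PartialEq)]
pub enum RolloutEvent {
    UserTurn(String),
    ThreadRollback { num_turns: usize },
}

/// Rebuilds the turn list by applying rollback markers in order.
pub fn replay_turns(events: &[RolloutEvent]) -> Vec<String> {
    let mut turns: Vec<String> = Vec::new();
    for event in events {
        match event {
            RolloutEvent::UserTurn(text) => turns.push(text.clone()),
            RolloutEvent::ThreadRollback { num_turns } => {
                // saturating_sub: rolling back more turns than exist clears all.
                let keep = turns.len().saturating_sub(*num_turns);
                turns.truncate(keep);
            }
        }
    }
    turns
}
```

Because the same replay runs for both core context and the UI turn list, the two views cannot drift apart.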

**Note**: this explicitly does **not** behave like `/undo`, which we just removed from the CLI and which does the opposite of what `thread/rollback` does: `/undo` reverts local changes via ghost commits/snapshots and does not modify the agent's context / conversation history.

### Motivation

- Fix a visual bug where transcript text could bleed through the on-screen copy "pill" overlay.
- Ensure the copy affordance fully covers the underlying buffer so the pill background is solid and consistent with styling.
- Document the approach in-code to make the background-clearing rationale explicit.

### Description

- Clear the pill area before drawing by iterating `Rect::positions()` and calling `cell.set_symbol(" ")` and `cell.set_style(base_style)` in `render_copy_pill` in `transcript_copy_ui.rs`.
- Added an explanatory comment for why the pill background is explicitly cleared.
- Added a unit test `copy_pill_clears_background` and committed the corresponding snapshot file to validate the rendering behavior.

### Testing

- Ran `just fmt` (formatting completed; a non-blocking environment warning may appear).
- Ran `just fix -p codex-tui2` to apply lints/fixes (completed).
- Ran `cargo test -p codex-tui2`; all tests passed (snapshot updated).

------

[Codex Task](https://chatgpt.com/codex/tasks/task_i_695c9b23e9b8832997d5a457c4d83410)

Added an agent control plane that lets sessions spawn or message other conversations via `AgentControl`.

`AgentBus` (`core/src/agent/bus.rs`) keeps track of the last known status of a conversation.

`ConversationManager` now holds shared state behind an `Arc`, so `AgentControl` keeps only a weak back-reference; the goal is simply to avoid an explicit reference cycle.
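A minimal sketch of the `Arc`/`Weak` split, assuming simplified types: `ManagerState`, its `statuses` map (standing in for what `AgentBus` tracks), and the `set_status`/`status` methods are hypothetical, not the actual `ConversationManager` or `AgentControl` API. The control handle upgrades its `Weak` on each call and simply fails once the manager is gone, so no reference cycle keeps the state alive.

```rust
use std::collections::HashMap;
use std::sync::{Arc, Mutex, Weak};

/// Hypothetical shared state owned by the conversation manager.
struct ManagerState {
    /// Last known status per conversation id (roughly what AgentBus tracks).
    statuses: Mutex<HashMap<u64, String>>,
}

struct ConversationManager {
    state: Arc<ManagerState>,
}

/// Hypothetical control handle: it holds only a Weak reference, so it never
/// keeps the manager's state alive on its own.
struct AgentControl {
    manager: Weak<ManagerState>,
}

impl ConversationManager {
    fn new() -> Self {
        let state = ManagerState { statuses: Mutex::new(HashMap::new()) };
        Self { state: Arc::new(state) }
    }

    fn agent_control(&self) -> AgentControl {
        AgentControl { manager: Arc::downgrade(&self.state) }
    }
}

impl AgentControl {
    /// Records a status; returns false if the manager has already been dropped.
    fn set_status(&self, conversation_id: u64, status: &str) -> bool {
        match self.manager.upgrade() {
            Some(state) => {
                state.statuses.lock().unwrap().insert(conversation_id, status.to_string());
                true
            }
            None => false,
        }
    }

    /// Reads the last known status, if the manager is still alive.
    fn status(&self, conversation_id: u64) -> Option<String> {
        let state = self.manager.upgrade()?;
        let statuses = state.statuses.lock().unwrap();
        statuses.get(&conversation_id).cloned()
    }
}

fn main() {
    let manager = ConversationManager::new();
    let control = manager.agent_control();
    control.set_status(1, "running");
    println!("{:?}", control.status(1));
    drop(manager);
    // The weak reference no longer upgrades once the manager is gone.
    println!("{}", control.set_status(1, "done"));
}
```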

Follow-ups:

* Build a small tool in the TUI to see every agent and send manual messages to each of them
* Handle approval requests in this TUI
* Add tools to spawn/communicate between agents (see related design)
* Define agent types

Force an announcement tooltip in the CLI. This queries the GitHub repo for this [file](https://raw.githubusercontent.com/openai/codex/main/announcement_tip.toml), which contains announcements in TOML that look like this:

```toml
# Example announcement tips for Codex TUI.
# Each [[announcements]] entry is evaluated in order; the last matching one is shown.
# Dates are UTC, formatted as YYYY-MM-DD. The from_date is inclusive and the to_date is exclusive.
# version_regex matches against the CLI version (env!("CARGO_PKG_VERSION")); omit to apply to all versions.
# target_app specifies which app should display the announcement (cli, vsce, ...).

[[announcements]]
content = "Welcome to Codex! Check out the new onboarding flow."
from_date = "2024-10-01"
to_date = "2024-10-15"
version_regex = "^0\\.0\\.0$"
target_app = "cli"
```

To keep this efficient, the announcement is fetched on a best-effort basis when the CLI launches (it is not refreshed afterwards). The fetch is asynchronous, and the announcement is always displayed if and only if the announcement file is available, the cache has been warmed, and there is a matching announcement (matching is recomputed for each new session).
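A minimal sketch of the matching rule described in the TOML comments (entries evaluated in order, last match wins, `from_date` inclusive, `to_date` exclusive), assuming a hypothetical `Announcement` struct and `pick_announcement` helper. `version_regex` matching is left out to keep the example dependency-free; the date check relies on zero-padded `YYYY-MM-DD` strings comparing correctly in lexicographic order.

```rust
/// Hypothetical in-memory form of one `[[announcements]]` entry.
/// `version_regex` is omitted to keep the sketch dependency-free.
struct Announcement {
    content: String,
    from_date: String, // inclusive, "YYYY-MM-DD" (UTC)
    to_date: String,   // exclusive, "YYYY-MM-DD" (UTC)
    target_app: String,
}

/// Returns the last entry whose date window contains `today` and whose
/// `target_app` matches. Zero-padded ISO dates compare correctly as strings.
fn pick_announcement<'a>(entries: &'a [Announcement], today: &str, app: &str) -> Option<&'a Announcement> {
    entries.iter().rev().find(|a| {
        a.from_date.as_str() <= today && today < a.to_date.as_str() && a.target_app == app
    })
}

fn main() {
    let entries = vec![Announcement {
        content: "Welcome to Codex! Check out the new onboarding flow.".to_string(),
        from_date: "2024-10-01".to_string(),
        to_date: "2024-10-15".to_string(),
        target_app: "cli".to_string(),
    }];
    match pick_announcement(&entries, "2024-10-05", "cli") {
        Some(a) => println!("{}", a.content),
        None => println!("no announcement"),
    }
}
```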

Fixes `ReadinessFlag::subscribe` to avoid handing out token 0 or duplicate tokens on `i32` wrap-around, adds regression tests, and prevents readiness gates from getting stuck waiting on an unmarkable or mis-authorized token.
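A minimal sketch of token allocation that survives `i32` wrap-around, assuming a hypothetical `TokenAllocator` that keeps a set of live tokens; it is not the actual `ReadinessFlag` implementation. It skips the reserved value 0 and any token still in use.

```rust
use std::collections::HashSet;

/// Hypothetical allocator illustrating the wrap-around rules:
/// token 0 is reserved and live tokens are never handed out twice.
struct TokenAllocator {
    last: i32,
    live: HashSet<i32>,
}

impl TokenAllocator {
    fn new() -> Self {
        Self { last: 0, live: HashSet::new() }
    }

    fn subscribe(&mut self) -> i32 {
        // Assumes at least one nonzero token is free; otherwise this loop would spin forever.
        loop {
            // wrapping_add: i32::MAX + 1 wraps to i32::MIN instead of panicking.
            self.last = self.last.wrapping_add(1);
            if self.last != 0 && !self.live.contains(&self.last) {
                self.live.insert(self.last);
                return self.last;
            }
        }
    }
}

fn main() {
    let mut flag = TokenAllocator::new();
    let first = flag.subscribe();
    let second = flag.subscribe();
    println!("{} {}", first, second);
}
```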

Background

Streaming assistant prose in tui2 was rendered with viewport-width wrapping during streaming, then stored in history cells as already-split `Line`s. Those width-derived breaks became indistinguishable from hard newlines, so the transcript could not "un-split" on resize. This also degraded copy/paste, since soft wraps looked like hard breaks.

What changed

- Introduce width-agnostic `MarkdownLogicalLine` output in `tui2/src/markdown_render.rs`, preserving markdown wrap semantics: initial/subsequent indents, per-line style, and a preformatted flag.
- Update the streaming collector (`tui2/src/markdown_stream.rs`) to emit logical lines (newline-gated) and remove any captured viewport width.
- Update streaming orchestration (`tui2/src/streaming/*`) to queue and emit logical lines, producing `AgentMessageCell::new_logical(...)`.
- Make `AgentMessageCell` store logical lines and wrap at render time in `HistoryCell::transcript_lines_with_joiners(width)`, emitting joiners so copy/paste can join soft-wrap continuations correctly.
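A minimal sketch of the logical-line idea, assuming ASCII text and plain greedy word wrapping: `LogicalLine`, `Row`, `wrap`, and `copy_text` are hypothetical simplifications, not the `MarkdownLogicalLine` or `transcript_lines_with_joiners` APIs. The stored line never changes; rows and their joiners are recomputed for whatever width is current, so copy/paste rejoins soft wraps with a space and keeps hard breaks as newlines.

```rust
/// Hypothetical width-agnostic line: stored once, wrapped at render time.
struct LogicalLine {
    text: String,
}

/// One visual row. `joiner` is `Some(" ")` when the row is a soft-wrap
/// continuation of the previous row and `None` when it starts a logical line.
#[derive(Debug, PartialEq)]
struct Row {
    text: String,
    joiner: Option<&'static str>,
}

/// Greedy word wrap at `width` (measured in bytes; assumes ASCII text).
fn wrap(line: &LogicalLine, width: usize) -> Vec<Row> {
    let mut rows = Vec::new();
    let mut current = String::new();
    for word in line.text.split(' ') {
        let needed = if current.is_empty() { word.len() } else { current.len() + 1 + word.len() };
        if !current.is_empty() && needed > width {
            let joiner = if rows.is_empty() { None } else { Some(" ") };
            rows.push(Row { text: std::mem::take(&mut current), joiner });
        }
        if !current.is_empty() {
            current.push(' ');
        }
        current.push_str(word);
    }
    let joiner = if rows.is_empty() { None } else { Some(" ") };
    rows.push(Row { text: current, joiner });
    rows
}

/// Rebuilds copyable text: soft wraps use their joiner, hard breaks become '\n'.
fn copy_text(rows: &[Row]) -> String {
    let mut out = String::new();
    for (i, row) in rows.iter().enumerate() {
        match row.joiner {
            Some(j) => out.push_str(j),
            None if i > 0 => out.push('\n'),
            None => {}
        }
        out.push_str(&row.text);
    }
    out
}

fn main() {
    let line = LogicalLine { text: "hello world foo".to_string() };
    // The same logical line re-wraps cleanly at any width.
    println!("{:?}", wrap(&line, 11));
    println!("{:?}", wrap(&line, 5));
    println!("{}", copy_text(&wrap(&line, 5)));
}
```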

Overlay deferral

When an overlay is active, defer *cells* (not rendered `Vec<Line>`) and render them when the overlay closes. This avoids baking in width-derived wraps based on a stale width.

Tests + docs

- Add resize/reflow regression tests + snapshots for streamed agent output.
- Expand module/API docs for the new logical-line streaming pipeline and clarify joiner semantics.
- Align scrollback-related docs/comments with current tui2 behavior (the main draw loop does not flush queued "history lines" to the terminal).

More details

See `codex-rs/tui2/docs/streaming_wrapping_design.md` for the full problem statement and solution approach, and `codex-rs/tui2/docs/tui_viewport_and_history.md` for viewport vs printed output behavior.

Trim whitespace when validating `*** Begin Patch`/`*** End Patch` markers in codex-apply-patch so padded marker lines parse as intended, and add regression coverage (unit + fixture scenario). This avoids `apply_patch` failures when models include extra spacing. Tested with `cargo test -p codex-apply-patch`.
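A minimal sketch of a whitespace-tolerant boundary check, assuming a hypothetical `has_patch_markers` helper (the real parser in codex-apply-patch does much more than this): it compares the first and last lines of the trimmed patch against the markers after trimming each line.

```rust
/// Marker strings from the apply_patch format.
const BEGIN_PATCH: &str = "*** Begin Patch";
const END_PATCH: &str = "*** End Patch";

/// Hypothetical boundary check: padded marker lines still count.
fn has_patch_markers(patch: &str) -> bool {
    let lines: Vec<&str> = patch.trim().lines().collect();
    match (lines.first(), lines.last()) {
        // At least two lines, so a lone marker is not both begin and end.
        (Some(first), Some(last)) if lines.len() >= 2 => {
            first.trim() == BEGIN_PATCH && last.trim() == END_PATCH
        }
        _ => false,
    }
}

fn main() {
    let padded = "  *** Begin Patch  \n*** Add File: a.txt\n+hi\n  *** End Patch\t\n";
    println!("{}", has_patch_markers(padded));
}
```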

**Motivation**

- Bring `codex exec resume` to parity with top‑level flags so global options (git check bypass, json, model, sandbox toggles) work after the subcommand, including when outside a git repo.

**Description**

- Exec CLI: mark `--skip-git-repo-check`, `--json`, `--model`, `--full-auto`, and `--dangerously-bypass-approvals-and-sandbox` as global so they’re accepted after `resume`.
- Tests: add `exec_resume_accepts_global_flags_after_subcommand` to verify those flags work when passed after `resume`.

**Testing**

- `just fmt`
- `cargo test -p codex-exec` (pass; ran with elevated perms to allow network/port binds)
- Manual: exercised `codex exec resume` with global flags after the subcommand to confirm behavior.

We've seen reports that people who try to log in on a remote/headless machine open the login link on their own machine and get errors. Update the instructions to ask those users to use `codex login --device-auth` instead.

<img width="1434" height="938" alt="CleanShot 2026-01-05 at 11 35 02@2x"
src="https://github.com/user-attachments/assets/2b209953-6a42-4eb0-8b55-bb0733f2e373"
/>

Adds an optional `justification` parameter to the `prefix_rule()` execpolicy DSL so policy authors can attach human-readable rationale to a rule. That justification is propagated through parsing/matching and can be surfaced to the model (or approval UI) when a command is blocked or requires approval.

When a command is rejected (or gated behind approval) due to policy, a generic message makes it hard for the model/user to understand what went wrong and what to do instead. Allowing policy authors to supply a short justification improves debuggability and helps guide the model toward compliant alternatives.

Example:

```python
prefix_rule(
    pattern = ["git", "push"],
    decision = "forbidden",
    justification = "pushing is blocked in this repo",
)
```

If Codex tried to run `git push origin main`, the failure would now include:

```
`git push origin main` rejected: pushing is blocked in this repo
```

whereas previously, all it was told was:

```
execpolicy forbids this command
```
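A minimal sketch of how a justification could flow into the rejection text, assuming a hypothetical in-memory `PrefixRule` and `rejection_message` helper. The two message formats mirror the examples above, but the code is not the execpolicy crate's implementation.

```rust
/// Hypothetical in-memory form of a parsed `prefix_rule()`.
struct PrefixRule {
    pattern: Vec<&'static str>,
    justification: Option<&'static str>,
}

impl PrefixRule {
    /// True when the command starts with every token of the pattern.
    fn matches(&self, command: &[&str]) -> bool {
        command.len() >= self.pattern.len()
            && self.pattern.iter().zip(command).all(|(p, c)| p == c)
    }
}

/// Uses the rule's justification when present, otherwise the generic message.
fn rejection_message(rule: &PrefixRule, command: &[&str]) -> String {
    match rule.justification {
        Some(reason) => format!("`{}` rejected: {}", command.join(" "), reason),
        None => "execpolicy forbids this command".to_string(),
    }
}

fn main() {
    let rule = PrefixRule {
        pattern: vec!["git", "push"],
        justification: Some("pushing is blocked in this repo"),
    };
    let command = ["git", "push", "origin", "main"];
    if rule.matches(&command) {
        println!("{}", rejection_message(&rule, &command));
    }
}
```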

The elevated sandbox creates two new Windows users, CodexSandboxOffline and CodexSandboxOnline. These users are necessary, so this PR does all it can to "hide" them. It uses the registry plus directory flags (on their home directories) so they show up as little as possible.

## Summary

When using device-code login with a custom issuer (`--experimental_issuer`), Codex correctly uses that issuer for the auth flow, but the **terminal prompt still told users to open the default OpenAI device URL** (`https://auth.openai.com/codex/device`). That’s confusing and can send users to the **wrong domain** (especially for enterprise/staging issuers). This PR updates the prompt (and related URLs) to consistently use the configured issuer. 🎯

---

## 🔧 What changed

* 🔗 **Device auth prompt link** now uses the configured issuer (instead of a hard-coded OpenAI URL)
* 🧭 **Redirect callback URL** is derived from the same issuer for consistency
* 🧼 Minor cleanup: normalize the issuer base URL once and reuse it (avoids formatting quirks like trailing `/`)
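A minimal sketch of the normalize-once approach, assuming hypothetical `normalize_issuer` and `device_auth_url` helpers. The `/codex/device` path is taken from the prompt output in this PR; the callback path is not sketched because it is not given here.

```rust
/// Strips trailing slashes once so every derived URL is formatted the same way.
fn normalize_issuer(issuer: &str) -> &str {
    issuer.trim_end_matches('/')
}

/// Device-code page under the configured issuer.
fn device_auth_url(issuer: &str) -> String {
    format!("{}/codex/device", normalize_issuer(issuer))
}

fn main() {
    // A trailing slash no longer produces "//codex/device".
    println!("{}", device_auth_url("https://auth.example.com/"));
}
```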

---

## 🧪 Repro + Before/After

### ▶️ Command

```bash
codex login --device-auth --experimental_issuer https://auth.example.com
```

### ❌ Before (wrong link shown)

```text
1. Open this link in your browser and sign in to your account
https://auth.openai.com/codex/device
```

### ✅ After (correct link shown)

```text
1. Open this link in your browser and sign in to your account
https://auth.example.com/codex/device
```

Full example output (same as before, but with the correct URL):

```text
Welcome to Codex [v0.72.0]
OpenAI's command-line coding agent
Follow these steps to sign in with ChatGPT using device code authorization:
1. Open this link in your browser and sign in to your account
https://auth.example.com/codex/device
2. Enter this one-time code (expires in 15 minutes)
BUT6-0M8K4
Device codes are a common phishing target. Never share this code.
```

---

## ✅ Test plan

* 🟦 `codex login --device-auth` (default issuer): output remains unchanged
* 🟩 `codex login --device-auth --experimental_issuer https://auth.example.com`:
  * prompt link points to the issuer ✅
  * callback URL is derived from the same issuer ✅
  * no double slashes / mismatched domains ✅

Co-authored-by: Eric Traut <etraut@openai.com>

This change improves the skills render section:

- Separate the skills list from usage rules with clear subheadings
- Define "skill" more clearly up front
- Remove confusing trigger/discovery wording and make reference-following guidance more actionable

Never treat `.codex` or `.codex/.sandbox` as a workspace root.

Handle write permissions to `.codex/.sandbox` in a single method so that the sandbox setup/runner can write logs and other setup files to that directory.
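A minimal sketch of the workspace-root exclusion, assuming a hypothetical `is_excluded_root` filter applied to candidate roots; the single write-permission method for `.codex/.sandbox` is not sketched. `Path::ends_with` compares whole path components, so a directory such as `x.codex` is not excluded by accident.

```rust
use std::path::{Path, PathBuf};

/// Hypothetical filter: `.codex` and `.codex/.sandbox` never count as workspace roots.
fn is_excluded_root(path: &Path) -> bool {
    path.file_name().map_or(false, |name| name == ".codex") || path.ends_with(".codex/.sandbox")
}

/// Keeps only the candidate roots that are allowed to be workspace roots.
fn workspace_roots(candidates: &[PathBuf]) -> Vec<PathBuf> {
    candidates.iter().filter(|p| !is_excluded_root(p)).cloned().collect()
}

fn main() {
    let candidates = vec![
        PathBuf::from("/repo"),
        PathBuf::from("/repo/.codex"),
        PathBuf::from("/repo/.codex/.sandbox"),
    ];
    println!("{:?}", workspace_roots(&candidates));
}
```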

What changed

- Added `outputSchema` support to the app-server APIs, mirroring `codex exec --output-schema` behavior.
- V1 `sendUserTurn` now accepts `outputSchema` and constrains the final assistant message for that turn.
- V2 `turn/start` now accepts `outputSchema` and constrains the final assistant message for that turn (explicitly per-turn only).

Core behavior

- `Op::UserTurn` already supported `final_output_json_schema`; now V1 `sendUserTurn` forwards `outputSchema` into that field.
- `Op::UserInput` now carries `final_output_json_schema` for per-turn settings updates; core maps it into `SessionSettingsUpdate.final_output_json_schema` so it applies to the created turn context.
- V2 `turn/start` does NOT persist the schema via `OverrideTurnContext` (it’s applied only for the current turn). Other overrides (cwd/model/etc.) keep their existing persistent behavior.

API / docs

- `codex-rs/app-server-protocol/src/protocol/v1.rs`: add `output_schema: Option<serde_json::Value>` to `SendUserTurnParams` (serialized as `outputSchema`).
- `codex-rs/app-server-protocol/src/protocol/v2.rs`: add `output_schema: Option<JsonValue>` to `TurnStartParams` (serialized as `outputSchema`).
- `codex-rs/app-server/README.md`: document `outputSchema` for `turn/start` and clarify it applies only to the current turn.
- `codex-rs/docs/codex_mcp_interface.md`: document `outputSchema` for v1 `sendUserTurn` and v2 `turn/start`.

Tests added/updated

- New app-server integration tests asserting `outputSchema` is forwarded into outbound `/responses` requests as `text.format`:
  - `codex-rs/app-server/tests/suite/output_schema.rs`
  - `codex-rs/app-server/tests/suite/v2/output_schema.rs`
- Added per-turn semantics tests (schema does not leak to the next turn):
  - `send_user_turn_output_schema_is_per_turn_v1`
  - `turn_start_output_schema_is_per_turn_v2`
- Added protocol wire-compat tests for the merged op:
  - serialize omits `final_output_json_schema` when `None`
  - deserialize works when the field is missing
  - serialize includes `final_output_json_schema` when `Some(schema)`

Call site updates (high level)

- Updated all `Op::UserInput { .. }` constructions to include `final_output_json_schema`:
  - `codex-rs/app-server/src/codex_message_processor.rs`
  - `codex-rs/core/src/codex_delegate.rs`
  - `codex-rs/mcp-server/src/codex_tool_runner.rs`
  - `codex-rs/tui/src/chatwidget.rs`
  - `codex-rs/tui2/src/chatwidget.rs`
  - plus impacted core tests.

Validation

- `just fmt`
- `cargo test -p codex-core`
- `cargo test -p codex-app-server`
- `cargo test -p codex-mcp-server`
- `cargo test -p codex-tui`
- `cargo test -p codex-tui2`
- `cargo test -p codex-protocol`
- `cargo clippy --all-features --tests --profile dev --fix -- -D warnings`

Load managed requirements from MDM key `requirements_toml_base64`.

Tested on my Mac (using `defaults` to set the preference, though this would be set by MDM in production):

```
➜ codex git:(gt/mdm-requirements) defaults read com.openai.codex requirements_toml_base64 | base64 -d
allowed_approval_policies = ["on-request"]
➜ codex git:(gt/mdm-requirements) just c --yolo
cargo run --bin codex -- "$@"
Finished `dev` profile [unoptimized + debuginfo] target(s) in 0.26s
Running `target/debug/codex --yolo`
Error loading configuration: value `Never` is not in the allowed set [OnRequest]
error: Recipe `codex` failed on line 11 with exit code 1
➜ codex git:(gt/mdm-requirements) defaults delete com.openai.codex requirements_toml_base64
➜ codex git:(gt/mdm-requirements) just c --yolo
cargo run --bin codex -- "$@"
Finished `dev` profile [unoptimized + debuginfo] target(s) in 0.24s
Running `target/debug/codex --yolo`
╭──────────────────────────────────────────────────────────╮
│ >_ OpenAI Codex (v0.0.0) │
│ │
│ model: codex-auto-balanced medium /model to change │
│ directory: ~/code/codex/codex-rs │
╰──────────────────────────────────────────────────────────╯
Tip: Start a fresh idea with /new; the previous session stays in history.
```
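A minimal sketch of an allow-list check that produces an error shaped like the one in the session log, assuming a simplified two-variant `ApprovalPolicy` enum and a hypothetical `check_allowed` helper; the base64 decoding and TOML parsing steps are omitted. Formatting both the value and the allowed set with `{:?}` yields `Never` and `[OnRequest]`.

```rust
/// Simplified subset of approval policies (hypothetical stand-in for the real enum).
#[derive(Debug, Clone, Copy, PartialEq)]
enum ApprovalPolicy {
    OnRequest,
    Never,
}

/// Rejects a configured value that is outside the managed allow-list.
fn check_allowed(value: ApprovalPolicy, allowed: &[ApprovalPolicy]) -> Result<(), String> {
    if allowed.contains(&value) {
        Ok(())
    } else {
        Err(format!("value `{:?}` is not in the allowed set {:?}", value, allowed))
    }
}

fn main() {
    // `--yolo` maps to the `Never` policy in the log above.
    if let Err(e) = check_allowed(ApprovalPolicy::Never, &[ApprovalPolicy::OnRequest]) {
        println!("Error loading configuration: {}", e);
    }
}
```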

Context

- This code parses Server-Sent Events (SSE) from the legacy Chat Completions streaming API (`wire_api = "chat"`).
- The upstream protocol terminates a stream with a final sentinel event: `data: [DONE]`.
- Some of our test stubs/helpers historically end the stream with `data: DONE` (no brackets).

How this was found

- GitHub Actions on Windows failed in codex-app-server integration tests with wiremock verification errors (expected multiple POSTs, got 1).

Diagnosis

- The job logs included: `codex_api::sse::chat: Failed to parse ChatCompletions SSE event ... data: DONE`.
- `eventsource_stream` surfaces the sentinel as a normal SSE event; it does not automatically close the stream.
- The parser previously attempted to JSON-decode every `data:` payload. The sentinel is not JSON, so we logged and skipped it, then continued polling.
- On servers that keep the HTTP connection open after emitting the sentinel (notably wiremock on Windows), skipping the sentinel meant we never emitted `ResponseEvent::Completed`.
- Higher layers wait for completion before progressing (emitting approval requests and issuing follow-up model calls), so the test never reached the subsequent requests, and wiremock panicked when its expected-call count was not met.

Fix

- Treat both `data: [DONE]` and `data: DONE` as explicit end-of-stream sentinels.
- When a sentinel is seen, flush any pending assistant/reasoning items and emit `ResponseEvent::Completed` once.
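A minimal sketch of the sentinel handling, assuming a hypothetical `ChatSseState` that treats every non-sentinel payload as a plain text delta (real payloads are JSON chunks) and simplified `ResponseEvent` variants. Completion is emitted as soon as either sentinel arrives, whether or not the connection closes, and only once.

```rust
/// Hypothetical, simplified events emitted by the parser.
#[derive(Debug, PartialEq)]
enum ResponseEvent {
    OutputTextDone(String),
    Completed,
}

/// Hypothetical parser state: accumulates text deltas until the stream ends.
#[derive(Default)]
struct ChatSseState {
    pending_text: String,
    completed: bool,
}

impl ChatSseState {
    /// Handles one SSE `data:` payload.
    fn on_data(&mut self, data: &str) -> Vec<ResponseEvent> {
        if self.completed {
            return Vec::new(); // Completed is emitted at most once.
        }
        let trimmed = data.trim();
        if trimmed == "[DONE]" || trimmed == "DONE" {
            self.completed = true;
            let mut events = Vec::new();
            // Flush pending output before signalling completion.
            if !self.pending_text.is_empty() {
                events.push(ResponseEvent::OutputTextDone(std::mem::take(&mut self.pending_text)));
            }
            events.push(ResponseEvent::Completed);
            return events;
        }
        self.pending_text.push_str(data);
        Vec::new()
    }
}

fn main() {
    let mut state = ChatSseState::default();
    for payload in ["Hel", "lo", "DONE"] {
        println!("{:?}", state.on_data(payload));
    }
}
```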

Tests

- Add a regression unit test asserting we complete on the sentinel even if the underlying connection is not closed.

## Summary

- Add a transcript scrollbar in `tui2` using `tui-scrollbar`.
- Reserve 2 columns on the right (1 empty gap + 1 scrollbar track) and plumb the reduced width through wrapping/selection/copy so rendering and interactions match.
- Auto-hide the scrollbar when the transcript is pinned to the bottom (columns remain reserved).
- Add mouse click/drag support for the scrollbar, with pointer capture so drags don’t fall through into transcript selection.
- Skip scrollbar hit-testing when auto-hidden to avoid an invisible interactive region.
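A minimal sketch of the column reservation and the hidden-scrollbar hit-test, assuming a hypothetical `Area` struct shaped like ratatui's `Rect` (defined locally to avoid the dependency); it does not use the `tui-scrollbar` API.

```rust
/// Hypothetical rectangle, shaped like ratatui's `Rect`.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Area {
    x: u16,
    y: u16,
    width: u16,
    height: u16,
}

/// Columns reserved on the right: one empty gap plus one scrollbar track.
const RESERVED_COLS: u16 = 2;

/// Splits the transcript area into (content, scrollbar track).
fn split_transcript(area: Area) -> (Area, Area) {
    let content = Area { width: area.width.saturating_sub(RESERVED_COLS), ..area };
    let track = Area { x: area.x + area.width.saturating_sub(1), width: area.width.min(1), ..area };
    (content, track)
}

/// Ignores the track while the scrollbar is auto-hidden (pinned to bottom),
/// so no invisible region captures clicks.
fn hits_scrollbar(track: Area, pinned_to_bottom: bool, col: u16, row: u16) -> bool {
    !pinned_to_bottom
        && col >= track.x
        && col < track.x + track.width
        && row >= track.y
        && row < track.y + track.height
}

fn main() {
    let (content, track) = split_transcript(Area { x: 0, y: 0, width: 80, height: 24 });
    println!("content={:?} track={:?}", content, track);
    println!("{}", hits_scrollbar(track, true, 79, 10));
}
```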

## Notes

- Styling is theme-aware: in light themes the thumb is darker than the track; in dark themes it reads as an “indented” element without going full-white.
- Pre-Ratatui 0.30 (ratatui-core split) requires a small scratch-buffer bridge; this should simplify once we move to Ratatui 0.30.

## Testing

- `just fmt`
- `just fix -p codex-tui2 --allow-no-vcs`
- `cargo test -p codex-tui2`

The Responses API requires that all tool names conform to `^[a-zA-Z0-9_-]+$`. This PR replaces all non-conforming characters with `_` to ensure that they can be used.

Fixes #8174
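A minimal sketch of the sanitization, assuming a hypothetical `sanitize_tool_name` helper that maps each character outside `[a-zA-Z0-9_-]` to `_`.

```rust
/// Replaces every character outside `[a-zA-Z0-9_-]` with `_`.
fn sanitize_tool_name(name: &str) -> String {
    name.chars()
        .map(|c| if c.is_ascii_alphanumeric() || c == '_' || c == '-' { c } else { '_' })
        .collect()
}

fn main() {
    println!("{}", sanitize_tool_name("mcp.server/read file"));
}
```

One consequence of a character-by-character mapping is that distinct names such as `a.b` and `a/b` collapse to the same result; the description does not say whether such collisions are handled.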

Fixes `/review` base-branch prompt resolution to use the session/turn cwd (respecting runtime cwd overrides), so merge-base/diff guidance is computed from the intended repo. Adds a regression test for cwd overrides. Tested with `cargo test -p codex-core --test all review_uses_overridden_cwd_for_base_branch_merge_base`.

Fixes https://github.com/openai/codex/issues/8479.

The issue is that the Chat Completions API expects all of the tool calls in a single assistant message, followed by all of the tool call outputs.
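A minimal sketch of the grouping, assuming hypothetical `HistoryItem` and `ChatMessage` types rather than the real request builder: consecutive function calls are merged into one assistant message, and each output then follows as its own `tool` message.

```rust
/// Hypothetical, simplified history items.
enum HistoryItem {
    FunctionCall { call_id: String, name: String },
    FunctionOutput { call_id: String, output: String },
}

/// Hypothetical Chat Completions-style messages.
#[derive(Debug, PartialEq)]
enum ChatMessage {
    Assistant { tool_calls: Vec<(String, String)> }, // (call_id, name)
    Tool { call_id: String, content: String },
}

/// Merges consecutive function calls into a single assistant message.
fn to_chat_messages(items: &[HistoryItem]) -> Vec<ChatMessage> {
    let mut messages: Vec<ChatMessage> = Vec::new();
    for item in items {
        match item {
            HistoryItem::FunctionCall { call_id, name } => {
                let call = (call_id.clone(), name.clone());
                // Extend the previous assistant message instead of starting a new one.
                match messages.last_mut() {
                    Some(ChatMessage::Assistant { tool_calls }) => tool_calls.push(call),
                    _ => messages.push(ChatMessage::Assistant { tool_calls: vec![call] }),
                }
            }
            HistoryItem::FunctionOutput { call_id, output } => messages.push(ChatMessage::Tool {
                call_id: call_id.clone(),
                content: output.clone(),
            }),
        }
    }
    messages
}

fn main() {
    let items = vec![
        HistoryItem::FunctionCall { call_id: "a".to_string(), name: "shell".to_string() },
        HistoryItem::FunctionCall { call_id: "b".to_string(), name: "shell".to_string() },
        HistoryItem::FunctionOutput { call_id: "a".to_string(), output: "ok".to_string() },
        HistoryItem::FunctionOutput { call_id: "b".to_string(), output: "ok".to_string() },
    ];
    for message in to_chat_messages(&items) {
        println!("{:?}", message);
    }
}
```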

Bumps [tokio-stream](https://github.com/tokio-rs/tokio) from 0.1.17 to 0.1.18.

<details>

<summary>Commits</summary>

<ul>
<li><a href="60b083b630"><code>60b083b</code></a> chore: prepare tokio-stream 0.1.18 (<a href="https://redirect.github.com/tokio-rs/tokio/issues/7830">#7830</a>)</li>
<li><a href="9cc02cc88d"><code>9cc02cc</code></a> chore: prepare tokio-util 0.7.18 (<a href="https://redirect.github.com/tokio-rs/tokio/issues/7829">#7829</a>)</li>
<li><a href="d2799d791b"><code>d2799d7</code></a> task: improve the docs of <code>Builder::spawn_local</code> (<a href="https://redirect.github.com/tokio-rs/tokio/issues/7828">#7828</a>)</li>
<li><a href="4d4870f291"><code>4d4870f</code></a> task: doc that task drops before JoinHandle completion (<a href="https://redirect.github.com/tokio-rs/tokio/issues/7825">#7825</a>)</li>
<li><a href="fdb150901a"><code>fdb1509</code></a> fs: check for io-uring opcode support (<a href="https://redirect.github.com/tokio-rs/tokio/issues/7815">#7815</a>)</li>
<li><a href="426a562780"><code>426a562</code></a> rt: remove <code>allow(dead_code)</code> after <code>JoinSet</code> stabilization (<a href="https://redirect.github.com/tokio-rs/tokio/issues/7826">#7826</a>)</li>
<li><a href="e3b89bbefa"><code>e3b89bb</code></a> chore: prepare Tokio v1.49.0 (<a href="https://redirect.github.com/tokio-rs/tokio/issues/7824">#7824</a>)</li>
<li><a href="4f577b84e9"><code>4f577b8</code></a> Merge 'tokio-1.47.3' into 'master'</li>
<li><a href="f320197693"><code>f320197</code></a> chore: prepare Tokio v1.47.3 (<a href="https://redirect.github.com/tokio-rs/tokio/issues/7823">#7823</a>)</li>
<li><a href="ea6b144cd1"><code>ea6b144</code></a> ci: freeze rustc on nightly-2025-01-25 in <code>netlify.toml</code> (<a href="https://redirect.github.com/tokio-rs/tokio/issues/7652">#7652</a>)</li>
<li>Additional commits viewable in <a href="https://github.com/tokio-rs/tokio/compare/tokio-stream-0.1.17...tokio-stream-0.1.18">compare view</a></li>
</ul>

</details>

<br />


Dependabot will resolve any conflicts with this PR as long as you don't alter it yourself. You can also trigger a rebase manually by commenting `@dependabot rebase`.

[//]: # (dependabot-automerge-start)

[//]: # (dependabot-automerge-end)

---

<details>

<summary>Dependabot commands and options</summary>

<br />

You can trigger Dependabot actions by commenting on this PR:

- `@dependabot rebase` will rebase this PR
- `@dependabot recreate` will recreate this PR, overwriting any edits that have been made to it
- `@dependabot merge` will merge this PR after your CI passes on it
- `@dependabot squash and merge` will squash and merge this PR after your CI passes on it
- `@dependabot cancel merge` will cancel a previously requested merge and block automerging
- `@dependabot reopen` will reopen this PR if it is closed
- `@dependabot close` will close this PR and stop Dependabot recreating it. You can achieve the same result by closing it manually
- `@dependabot show <dependency name> ignore conditions` will show all of the ignore conditions of the specified dependency
- `@dependabot ignore this major version` will close this PR and stop Dependabot creating any more for this major version (unless you reopen the PR or upgrade to it yourself)
- `@dependabot ignore this minor version` will close this PR and stop Dependabot creating any more for this minor version (unless you reopen the PR or upgrade to it yourself)
- `@dependabot ignore this dependency` will close this PR and stop Dependabot creating any more for this dependency (unless you reopen the PR or upgrade to it yourself)

</details>

Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

Bumps [clap_complete](https://github.com/clap-rs/clap) from 4.5.57 to 4.5.64.

<details>

<summary>Commits</summary>

<ul>
<li><a href="e115243369"><code>e115243</code></a> chore: Release</li>
<li><a href="d4c34fa2b8"><code>d4c34fa</code></a> docs: Update changelog</li>
<li><a href="ab4f438860"><code>ab4f438</code></a> Merge pull request <a href="https://redirect.github.com/clap-rs/clap/issues/6203">#6203</a> from jpgrayson/fix/zsh-space-after-dir-completions</li>
<li><a href="5571b83c8a"><code>5571b83</code></a> fix(complete): Trailing space after zsh directory completions</li>
<li><a href="06a2311586"><code>06a2311</code></a> chore: Release</li>
<li><a href="bed131f7ae"><code>bed131f</code></a> docs: Update changelog</li>
<li><a href="a61c53e6dd"><code>a61c53e</code></a> Merge pull request <a href="https://redirect.github.com/clap-rs/clap/issues/6202">#6202</a> from iepathos/6201-symlink-path-completions</li>
<li><a href="c3b440570e"><code>c3b4405</code></a> fix(complete): Follow symlinks in path completion</li>
<li><a href="a794395340"><code>a794395</code></a> test(complete): Add symlink path completion tests</li>
<li><a href="ca0aeba31f"><code>ca0aeba</code></a> chore: Release</li>
<li>Additional commits viewable in <a href="https://github.com/clap-rs/clap/compare/clap_complete-v4.5.57...clap_complete-v4.5.64">compare view</a></li>
</ul>

</details>


Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

Clicking the transcript copy pill or pressing the copy shortcut now copies the selected transcript text and clears the highlight.

Show transient footer feedback ("Copied"/"Copy failed") after a copy attempt, with the logic in `transcript_copy_action` to keep `app.rs` smaller and closer to tui for long-term diffs.

Update footer snapshots and add tiny unit tests for feedback expiry.

https://github.com/user-attachments/assets/c36c8163-11c5-476b-b388-e6fbe0ff6034
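A minimal sketch of feedback expiry, assuming a hypothetical `CopyFeedback` type and a 2-second display window (the actual duration is not stated here). Passing `now` in explicitly keeps the expiry logic testable without sleeping.

```rust
use std::time::{Duration, Instant};

/// Hypothetical display window for the footer feedback.
const FEEDBACK_TTL: Duration = Duration::from_secs(2);

#[derive(Debug, Clone, Copy, PartialEq)]
enum CopyOutcome {
    Copied,
    Failed,
}

struct CopyFeedback {
    outcome: CopyOutcome,
    shown_at: Instant,
}

impl CopyFeedback {
    /// Footer text while the feedback is still fresh, `None` once it has expired.
    fn footer_text(&self, now: Instant) -> Option<&'static str> {
        if now.saturating_duration_since(self.shown_at) >= FEEDBACK_TTL {
            return None;
        }
        Some(match self.outcome {
            CopyOutcome::Copied => "Copied",
            CopyOutcome::Failed => "Copy failed",
        })
    }
}

fn main() {
    let feedback = CopyFeedback { outcome: CopyOutcome::Copied, shown_at: Instant::now() };
    println!("{:?}", feedback.footer_text(Instant::now()));
}
```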

When the selection ends on the last visible row, the copy affordance had no space below and never rendered. Fall back to placing it above (or on the same row for 1-row viewports), and add a regression test.

Mouse/trackpad scrolling in tui2 applies deltas in visual lines, but the transcript scroll state was anchored only to `CellLine` entries.

When a 1-line scroll landed on the synthetic inter-cell `Spacer` row (inserted between non-continuation cells), `TranscriptScroll::anchor_for` would skip that row and snap back to the adjacent cell line. That left the resolved top offset unchanged for small/coalesced scroll deltas, so scrolling appeared to get stuck right before certain cells (commonly user prompts and command output cells).

Fix this by making spacer rows a first-class scroll anchor:

- Add `TranscriptScroll::ScrolledSpacerBeforeCell` and resolve it back to the spacer row index when present.
- Update `anchor_for`/`scrolled_by` to preserve spacers instead of skipping them.
- Treat the new variant as "already anchored" in `lock_transcript_scroll_to_current_view`.
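A minimal sketch of treating spacer rows as first-class anchors, assuming hypothetical `LineMeta` and `Anchor` enums (the `SpacerBeforeCell` variant loosely mirrors `TranscriptScroll::ScrolledSpacerBeforeCell`). Every row, spacer included, round-trips through `anchor_for` and `resolve` to the same index, so a 1-line scroll onto a spacer no longer snaps back.

```rust
/// Hypothetical per-row metadata for the flattened transcript.
#[derive(Debug, Clone, Copy, PartialEq)]
enum LineMeta {
    CellLine { cell: usize, line: usize },
    Spacer { before_cell: usize },
}

/// Hypothetical scroll anchor; spacers get their own variant instead of being skipped.
#[derive(Debug, Clone, Copy, PartialEq)]
enum Anchor {
    CellLine { cell: usize, line: usize },
    SpacerBeforeCell { cell: usize },
}

/// Anchors the row at `top` without skipping spacer rows.
fn anchor_for(meta: &[LineMeta], top: usize) -> Option<Anchor> {
    meta.get(top).map(|m| match *m {
        LineMeta::CellLine { cell, line } => Anchor::CellLine { cell, line },
        LineMeta::Spacer { before_cell } => Anchor::SpacerBeforeCell { cell: before_cell },
    })
}

/// Resolves an anchor back to a row index in the current layout.
fn resolve(meta: &[LineMeta], anchor: Anchor) -> Option<usize> {
    meta.iter().position(|m| match (anchor, *m) {
        (Anchor::CellLine { cell, line }, LineMeta::CellLine { cell: c, line: l }) => cell == c && line == l,
        (Anchor::SpacerBeforeCell { cell }, LineMeta::Spacer { before_cell }) => cell == before_cell,
        _ => false,
    })
}

fn main() {
    let meta = [
        LineMeta::CellLine { cell: 0, line: 0 },
        LineMeta::Spacer { before_cell: 1 },
        LineMeta::CellLine { cell: 1, line: 0 },
    ];
    let anchor = anchor_for(&meta, 1).unwrap();
    println!("{:?} -> {:?}", anchor, resolve(&meta, anchor));
}
```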

Tests:

- `cargo test -p codex-tui2`

## Summary

Forked repositories inherit GitHub Actions workflows, including scheduled ones. This causes:

1. **Wasted Actions minutes** - Scheduled workflows run on forks even though they will fail
2. **Failed runs** - Workflows requiring `CODEX_OPENAI_API_KEY` fail immediately on forks
3. **Noise** - Fork owners see failed workflow runs they didn't trigger

This PR adds `if: github.repository == 'openai/codex'` guards to workflows that should only run on the upstream repository.

### Affected workflows

| Workflow | Trigger | Issue |
|----------|---------|-------|
| `rust-release-prepare` | `schedule: */4 hours` | Runs 6x/day on every fork |
| `close-stale-contributor-prs` | `schedule: daily` | Runs daily on every fork |
| `issue-deduplicator` | `issues: opened` | Requires `CODEX_OPENAI_API_KEY` |
| `issue-labeler` | `issues: opened` | Requires `CODEX_OPENAI_API_KEY` |

### Note

`cla.yml` already has an equivalent guard (`github.repository_owner == 'openai'`), so it was not modified.

## Test plan

- [ ] Verify workflows still run correctly on `openai/codex`
- [ ] Verify workflows are skipped on forks (can check via the Actions tab on any fork)

The transcript viewport draws every frame. Ratatui's `Line::render_ref` does grapheme segmentation and span layout, so repeated redraws can burn CPU during streaming even when the visible transcript hasn't changed.

Introduce `TranscriptViewCache` to reduce per-frame work:

- `WrappedTranscriptCache` memoizes flattened+wrapped transcript lines per width, appends incrementally as new cells arrive, and rebuilds on width change, truncation (backtrack), or transcript replacement.
- `TranscriptRasterCache` caches rasterized rows (`Vec<Cell>`) per line index and user-row styling; redraws copy cells instead of rerendering spans.

The caches are width-scoped and store base transcript content only; selection highlighting and copy affordances are applied after drawing. User rows include the row-wide base style in the cached raster.

Refactor `transcript_render` to expose `append_wrapped_transcript_cell` for incremental building, and add a test that incremental append matches the full build.
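A minimal sketch of the width-scoped, append-only behavior, assuming a hypothetical `WrappedCache` that wraps by fixed character chunks and uses a caller-supplied `generation` counter to detect transcript replacement; the raster cache is not sketched.

```rust
/// Hypothetical width-scoped cache of wrapped transcript lines.
struct WrappedCache {
    width: u16,
    generation: u64, // bumped by the caller when the transcript is replaced
    cells_seen: usize,
    lines: Vec<String>,
    full_rebuilds: usize,
}

/// Stand-in for the real flatten+wrap step: fixed-width character chunks.
fn wrap_cell(cell: &str, width: u16) -> Vec<String> {
    let chars: Vec<char> = cell.chars().collect();
    chars.chunks(width.max(1) as usize).map(|c| c.iter().collect::<String>()).collect()
}

impl WrappedCache {
    fn new() -> Self {
        Self { width: 0, generation: 0, cells_seen: 0, lines: Vec::new(), full_rebuilds: 0 }
    }

    fn ensure(&mut self, cells: &[String], width: u16, generation: u64) -> &[String] {
        let must_rebuild = width != self.width
            || generation != self.generation
            || cells.len() < self.cells_seen; // truncation, e.g. backtrack
        if must_rebuild {
            self.width = width;
            self.generation = generation;
            self.cells_seen = 0;
            self.lines.clear();
            self.full_rebuilds += 1;
        }
        // Append only the cells that arrived since the last call.
        for cell in &cells[self.cells_seen..] {
            self.lines.extend(wrap_cell(cell, width));
        }
        self.cells_seen = cells.len();
        &self.lines
    }
}

fn main() {
    let mut cache = WrappedCache::new();
    let mut cells = vec!["abcdef".to_string()];
    println!("{:?}", cache.ensure(&cells, 4, 0));
    cells.push("gh".to_string());
    println!("{:?}", cache.ensure(&cells, 4, 0));
    println!("full rebuilds: {}", cache.full_rebuilds);
}
```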

Add `docs/tui2/performance-testing.md` as a playbook for macOS `sample` profiles and hotspot greps.

Expand `transcript_view_cache` tests to cover rebuild conditions, raster equivalence vs direct rendering, user-row caching, and eviction.

Test: `cargo test -p codex-tui2`

The last token count in the context manager is initialized to 0 and only gets populated by events from the server.

This PR populates it on resume so we can decide whether we need to compact.
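A minimal sketch of recovering the count on resume, assuming hypothetical `RolloutEntry` items that record token counts and a simple `tokens >= limit` threshold (the actual compaction rule is not described here).

```rust
/// Hypothetical, simplified rollout entries.
enum RolloutEntry {
    Message(String),
    TokenCount { total_tokens: u64 },
}

/// Most recent token count recorded in the rollout, or 0 if none was recorded.
fn last_token_count(entries: &[RolloutEntry]) -> u64 {
    entries
        .iter()
        .rev()
        .find_map(|e| match e {
            RolloutEntry::TokenCount { total_tokens } => Some(*total_tokens),
            RolloutEntry::Message(_) => None,
        })
        .unwrap_or(0)
}

/// Hypothetical compaction check against a context-window limit.
fn needs_compaction(tokens: u64, limit: u64) -> bool {
    tokens >= limit
}

fn main() {
    let entries = vec![
        RolloutEntry::Message("hi".to_string()),
        RolloutEntry::TokenCount { total_tokens: 9_500 },
    ];
    let tokens = last_token_count(&entries);
    println!("{} {}", tokens, needs_compaction(tokens, 9_000));
}
```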

This reduces unnecessary frame scheduling in codex-tui2.

Changes:

- Gate redraw scheduling for streaming deltas when nothing visible changes.
- Avoid a redraw feedback loop from footer transcript UI state updates.

Why:

- Streaming deltas can arrive at very high frequency; redrawing on every delta can drive a near-constant render loop.
- `BottomPane` was requesting another frame after every Draw even when the derived transcript UI state was unchanged.
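A minimal sketch of the feedback-loop fix, assuming a hypothetical `FooterTranscriptState` and a counter standing in for frame requests: a frame is requested only when the derived state actually changes, so a draw that recomputes identical state does not schedule another draw.

```rust
/// Hypothetical derived footer state; only visible fields matter for redraws.
#[derive(Debug, Clone, PartialEq, Default)]
struct FooterTranscriptState {
    scrolled_lines: usize,
    selection_active: bool,
}

#[derive(Default)]
struct BottomPane {
    footer: FooterTranscriptState,
    frames_requested: usize,
}

impl BottomPane {
    /// Stores new derived state and requests a frame only when it changed.
    fn set_footer_state(&mut self, next: FooterTranscriptState) {
        if next != self.footer {
            self.footer = next;
            self.frames_requested += 1;
        }
    }
}

fn main() {
    let mut pane = BottomPane::default();
    let state = FooterTranscriptState { scrolled_lines: 3, selection_active: false };
    pane.set_footer_state(state.clone());
    pane.set_footer_state(state); // unchanged: no new frame
    println!("frames requested: {}", pane.frames_requested);
}
```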

Testing:

- `cargo test -p codex-tui2`

Manual sampling:

- `sample "$(pgrep -n codex-tui2)" 3 -file /tmp/tui2.idle.after.sample.txt`
- `sample "$(pgrep -n codex-tui2)" 3 -file /tmp/tui2.streaming.after.sample.txt`

This eliminates redundant user documentation and allows us to focus our documentation investments.

I left tombstone files for most of the existing ".md" docs files to avoid broken links. These now contain brief links to the developer docs site.

This is more future-proof if we ever decide to add additional sandbox users for new functionality.

This also moves some more user-related code into a new file for code cleanliness.

Bumps [regex-lite](https://github.com/rust-lang/regex) from 0.1.7 to 0.1.8.

<details>

<summary>Changelog</summary>

<p><em>Sourced from <a href="https://github.com/rust-lang/regex/blob/master/CHANGELOG.md">regex-lite's changelog</a>.</em></p>
<blockquote>
<h1>0.1.80</h1>
<ul>
<li>[PR <a href="https://redirect.github.com/rust-lang/regex/issues/292">#292</a>](<a href="https://redirect.github.com/rust-lang/regex/pull/292">rust-lang/regex#292</a>): Fixes bug <a href="https://redirect.github.com/rust-lang/regex/issues/291">#291</a>, which was introduced by PR <a href="https://redirect.github.com/rust-lang/regex/issues/290">#290</a>.</li>
</ul>
</blockquote>

</details>

<details>

<summary>Commits</summary>

<ul>
<li><a href="140f8949da"><code>140f894</code></a> regex-lite-0.1.8</li>
<li><a href="27d6d65263"><code>27d6d65</code></a> 1.12.1</li>
<li><a href="85398ad500"><code>85398ad</code></a> changelog: 1.12.1</li>
<li><a href="764efbd305"><code>764efbd</code></a> api: tweak the lifetime of <code>Captures::get_match</code></li>
<li><a href="ee6aa55e01"><code>ee6aa55</code></a> rure-0.2.4</li>
<li><a href="42076c6bca"><code>42076c6</code></a> 1.12.0</li>
<li><a href="aef2153e31"><code>aef2153</code></a> deps: bump to regex-automata 0.4.12</li>
<li><a href="459dbbeaa9"><code>459dbbe</code></a> regex-automata-0.4.12</li>
<li><a href="610bf2d76e"><code>610bf2d</code></a> regex-syntax-0.8.7</li>
<li><a href="7dbb384dd0"><code>7dbb384</code></a> changelog: 1.12.0</li>
<li>Additional commits viewable in <a href="https://github.com/rust-lang/regex/compare/regex-lite-0.1.7...regex-lite-0.1.8">compare view</a></li>
</ul>

</details>

<br />

[](https://docs.github.com/en/github/managing-security-vulnerabilities/about-dependabot-security-updates#about-compatibility-scores)

Dependabot will resolve any conflicts with this PR as long as you don't alter it yourself. You can also trigger a rebase manually by commenting `@dependabot rebase`.

[//]: # (dependabot-automerge-start)

[//]: # (dependabot-automerge-end)

---

<details>

<summary>Dependabot commands and options</summary>

<br />

You can trigger Dependabot actions by commenting on this PR:

- `@dependabot rebase` will rebase this PR
- `@dependabot recreate` will recreate this PR, overwriting any edits that have been made to it
- `@dependabot merge` will merge this PR after your CI passes on it
- `@dependabot squash and merge` will squash and merge this PR after your CI passes on it
- `@dependabot cancel merge` will cancel a previously requested merge and block automerging
- `@dependabot reopen` will reopen this PR if it is closed
- `@dependabot close` will close this PR and stop Dependabot recreating it. You can achieve the same result by closing it manually
- `@dependabot show <dependency name> ignore conditions` will show all of the ignore conditions of the specified dependency
- `@dependabot ignore this major version` will close this PR and stop Dependabot creating any more for this major version (unless you reopen the PR or upgrade to it yourself)
- `@dependabot ignore this minor version` will close this PR and stop Dependabot creating any more for this minor version (unless you reopen the PR or upgrade to it yourself)
- `@dependabot ignore this dependency` will close this PR and stop Dependabot creating any more for this dependency (unless you reopen the PR or upgrade to it yourself)

</details>

Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

Bumps [toml_edit](https://github.com/toml-rs/toml) from 0.23.7 to 0.24.0+spec-1.1.0.

<details>
<summary>Commits</summary>
<ul>
<li><a href="2e09401567"><code>2e09401</code></a> chore: Release</li>
<li><a href="e32c7a2f9b"><code>e32c7a2</code></a> chore: Release</li>
<li><a href="df1c3286de"><code>df1c328</code></a> docs: Update changelog</li>
<li><a href="b826cf4914"><code>b826cf4</code></a> feat(edit)!: Allow <code>set_position(None)</code> (<a href="https://redirect.github.com/toml-rs/toml/issues/1080">#1080</a>)</li>
<li><a href="8043f20af7"><code>8043f20</code></a> feat(edit)!: Allow <code>set_position(None)</code></li>
<li><a href="a02c0db59f"><code>a02c0db</code></a> feat: Support TOML 1.1 (<a href="https://redirect.github.com/toml-rs/toml/issues/1079">#1079</a>)</li>
<li><a href="5cfb838b15"><code>5cfb838</code></a> feat(edit): Support TOML 1.1</li>
<li><a href="1eb4d606d3"><code>1eb4d60</code></a> feat(toml): Support TOML 1.1</li>
<li><a href="695d7883d8"><code>695d788</code></a> feat(edit)!: Multi-line inline tables with trailing commas</li>
<li><a href="cc4f7acd94"><code>cc4f7ac</code></a> feat(toml): Multi-line inline tables with trailing commas</li>
<li>Additional commits viewable in <a href="https://github.com/toml-rs/toml/compare/v0.23.7...v0.24.0">compare view</a></li>
</ul>
</details>


Signed-off-by: dependabot[bot] <support@github.com>

Co-authored-by: dependabot[bot] <49699333+dependabot[bot]@users.noreply.github.com>

I attempted to build codex on LoongArch Linux and encountered compilation errors.

After investigation, the errors were traced to certain `windows-sys` features which rely on platform-specific cfgs that only support x86 and aarch64.

With this change applied, the project now builds and runs successfully on my platform:

- OS: AOSC OS (loongarch64)
- Kernel: Linux 6.17
- CPU: Loongson-3A6000

Please let me know if this approach is reasonable, or if there is a better way to support additional platforms.

### What

Builds on #8293.

Add `additional_details`, which contains the upstream error message, to the relevant structures used to pass along retryable `StreamError`s.

Use the new TUI status indicator's `details` field (shown under the status header) to display `additional_details` to the user on retryable `Reconnecting...` errors. This makes retryable errors clearer to users.

I will make a corresponding change to the VSCode extension to show `additional_details` as expandable content in the `Reconnecting...` cell.

Examples:

<img width="1012" height="326" alt="image" src="https://github.com/user-attachments/assets/f35e7e6a-8f5e-4a2f-a764-358101776996" />

<img width="1526" height="358" alt="image" src="https://github.com/user-attachments/assets/0029cbc0-f062-4233-8650-cc216c7808f0" />
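The flow described above can be sketched in Rust. This is a minimal, hypothetical model, not the code in this PR: `RetryableStreamError`, `StatusIndicator`, and `reconnecting()` are illustrative names. The only behavior carried over from the description is that a retryable error may carry an optional `additional_details` string, which is shown under the `Reconnecting...` header.

```rust
use std::fmt;

/// Hypothetical stand-in for a retryable stream error that carries the
/// upstream error message in `additional_details`.
#[derive(Debug, Clone)]
pub struct RetryableStreamError {
    pub message: String,
    pub additional_details: Option<String>,
}

/// Hypothetical status indicator: a header plus an optional details line
/// rendered underneath it.
#[derive(Debug, Clone, PartialEq)]
pub struct StatusIndicator {
    pub header: String,
    pub details: Option<String>,
}

impl StatusIndicator {
    /// Build the `Reconnecting...` status, copying the upstream message
    /// (if any) into the `details` field.
    pub fn reconnecting(err: &RetryableStreamError) -> Self {
        StatusIndicator {
            header: "Reconnecting...".to_string(),
            details: err.additional_details.clone(),
        }
    }
}

impl fmt::Display for StatusIndicator {
    /// Render the header, then the details (indented) on the next line.
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "{}", self.header)?;
        if let Some(details) = &self.details {
            write!(f, "\n  {details}")?;
        }
        Ok(())
    }
}
```

Keeping `details` optional means an error without an upstream message renders exactly as before, with only the header.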

This PR introduces a `codex-utils-cargo-bin` utility crate that wraps/replaces our use of `assert_cmd::Command` and `escargot::CargoBuild`.

As you can infer from the introduction of `buck_project_root()` in this PR, I am attempting to make it possible to build Codex under [Buck2](https://buck2.build) as well as `cargo`. With Buck2, I hope to achieve faster incremental local builds (largely due to Buck2's [dice](https://buck2.build/docs/insights_and_knowledge/modern_dice/) build strategy and its local build daemon), as well as faster CI builds if we invest in remote execution and caching.

See https://buck2.build/docs/getting_started/what_is_buck2/#why-use-buck2-key-advantages for more details about the performance advantages of Buck2.

Buck2 enforces stronger requirements in terms of build and test isolation. It discourages assumptions about absolute paths, which is key to enabling remote execution. `assert_cmd::Command` reads the `CARGO_BIN_EXE_*` environment variables, and Cargo sets those to absolute paths. This is a problem for Buck2, which is why we need this `codex-utils-cargo-bin` utility.

My WIP Buck2 setup passes the `CARGO_BIN_EXE_*` environment variables to a `rust_test()` build rule as relative paths. `codex-utils-cargo-bin` resolves these values to absolute paths when necessary.
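A minimal sketch of the path-resolution idea, assuming relative `CARGO_BIN_EXE_*` values should be joined onto a project root while absolute values are used unchanged. `resolve_bin_path` and `cargo_bin` are hypothetical names, not the API of `codex-utils-cargo-bin`. Reading the variable at runtime with `std::env::var` is also an assumption of this sketch, and the real crate may locate the root differently (for example via the `buck_project_root()` helper mentioned above).

```rust
use std::env;
use std::path::{Path, PathBuf};

/// Hypothetical helper: resolve a binary path taken from a
/// `CARGO_BIN_EXE_<name>`-style variable. Absolute paths (what Cargo
/// provides) are returned unchanged; relative paths (as in a Buck2
/// setup) are joined onto `root`.
pub fn resolve_bin_path(raw: &str, root: &Path) -> PathBuf {
    let path = Path::new(raw);
    if path.is_absolute() {
        path.to_path_buf()
    } else {
        root.join(path)
    }
}

/// Hypothetical lookup: read `CARGO_BIN_EXE_<name>` from the process
/// environment at runtime (an assumption of this sketch) and resolve it
/// against `root`. Returns `None` if the variable is unset or not UTF-8.
pub fn cargo_bin(name: &str, root: &Path) -> Option<PathBuf> {
    let var = format!("CARGO_BIN_EXE_{name}");
    env::var(&var).ok().map(|raw| resolve_bin_path(&raw, root))
}
```

Passing absolute paths through unchanged keeps plain `cargo test` runs working, since Cargo already provides absolute paths; only the relative values need a root to resolve against.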

---

[//]: # (BEGIN SAPLING FOOTER)

Stack created with [Sapling](https://sapling-scm.com). Best reviewed with [ReviewStack](https://reviewstack.dev/openai/codex/pull/8496).

* #8498

* __->__ #8496

Clamp frame draw notifications in the `FrameRequester` scheduler so we